Photo illustration: YouTube Flagging vs Flagging on Other Platforms

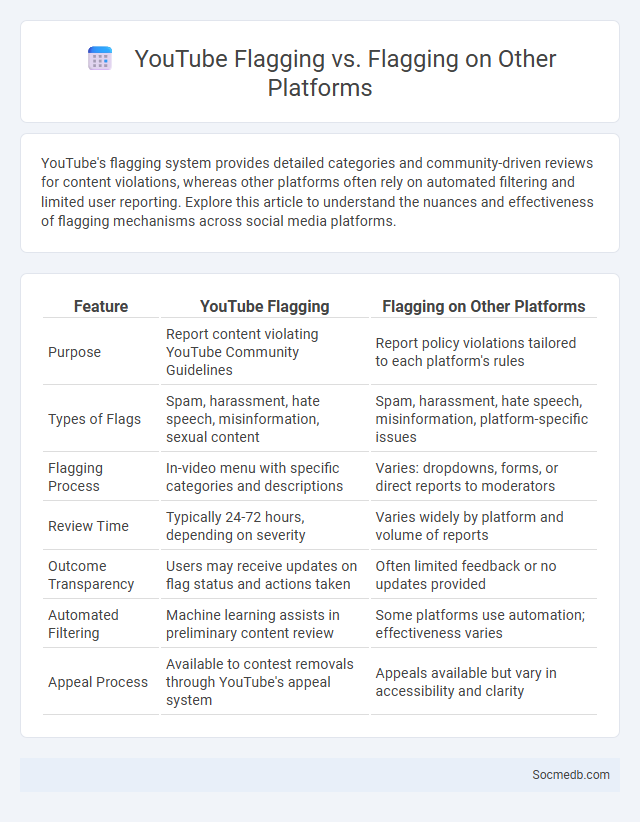

YouTube's flagging system provides detailed categories and community-driven reviews for content violations, whereas other platforms often rely on automated filtering and limited user reporting. Explore this article to understand the nuances and effectiveness of flagging mechanisms across social media platforms.

Table of Comparison

| Feature | YouTube Flagging | Flagging on Other Platforms |
|---|---|---|
| Purpose | Report content violating YouTube Community Guidelines | Report policy violations tailored to each platform's rules |
| Types of Flags | Spam, harassment, hate speech, misinformation, sexual content | Spam, harassment, hate speech, misinformation, platform-specific issues |
| Flagging Process | In-video menu with specific categories and descriptions | Varies: dropdowns, forms, or direct reports to moderators |
| Review Time | Typically 24-72 hours, depending on severity | Varies widely by platform and volume of reports |
| Outcome Transparency | Users may receive updates on flag status and actions taken | Often limited feedback or no updates provided |
| Automated Filtering | Machine learning assists in preliminary content review | Some platforms use automation; effectiveness varies |
| Appeal Process | Available to contest removals through YouTube's appeal system | Appeals available but vary in accessibility and clarity |

Understanding Content Flagging Across Platforms

Content flagging on social media platforms enables users to report inappropriate or harmful posts, helping maintain community standards. Each platform employs algorithms combined with human moderators to review flagged content swiftly and enforce policies against hate speech, misinformation, or explicit material. Understanding the differences in flagging mechanisms on platforms like Facebook, Twitter, and Instagram is crucial for effective content moderation and user safety.

How YouTube Flagging Works

YouTube flagging allows users to report content that violates community guidelines, such as hate speech, spam, or copyright infringement. When you flag a video, YouTube's moderation team reviews it using machine learning algorithms and manual assessments to determine whether it should be removed, age-restricted, or demonetized. This system helps maintain a safe online environment by filtering harmful or inappropriate content promptly.

Flagging Mechanisms on Other Social Media Platforms

Flagging mechanisms on social media platforms like Facebook, Instagram, and Twitter enable users to report inappropriate content, hate speech, or misinformation quickly. These systems utilize algorithms combined with human moderators to review flagged posts, ensuring community guidelines are upheld and harmful content is removed promptly. Effective flagging contributes to safer online environments by empowering users to actively participate in content moderation.

Key Differences: YouTube Flagging vs Other Platforms

YouTube flagging primarily relies on automated systems combined with user reports to identify content violating community guidelines, whereas other social media platforms like Facebook and Twitter emphasize manual reviews alongside AI filters. YouTube's approach prioritizes strict copyright enforcement and detailed content categorization, while platforms such as Instagram focus more on user discretion and content removal notifications. The transparency of flagging outcomes on YouTube, including strike consequences and video removals, contrasts with the more ambiguous enforcement policies seen on other social networks.

User Experience: Reporting Content on YouTube

YouTube's reporting flow is streamlined for accessibility and efficiency, with clearly labeled options that let users identify issues such as inappropriate material, spam, or copyright violations. The process guides users through specific categories and subcategories so that each concern is communicated precisely, which facilitates quicker moderation decisions. User feedback mechanisms and transparency reports further improve trust and allow continuous refinement of content policy enforcement on the platform.
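To make the category and subcategory idea concrete, here is a minimal Python sketch of how a report could be validated before it reaches moderators. The `TAXONOMY` mapping, the `Report` class, and the `make_report` helper are all hypothetical names invented for illustration; they do not reflect YouTube's actual category list or any real API.

```python
from dataclasses import dataclass

# Hypothetical taxonomy for illustration only; not YouTube's real categories.
TAXONOMY = {
    "spam": {"misleading_text", "scam"},
    "harassment": {"bullying", "threats"},
    "sexual_content": {"nudity"},
}


@dataclass(frozen=True)
class Report:
    """A single user report with a category and a narrower subcategory."""
    video_id: str
    category: str
    subcategory: str
    note: str = ""


def make_report(video_id: str, category: str, subcategory: str, note: str = "") -> Report:
    """Build a report, rejecting category/subcategory pairs outside the taxonomy."""
    if category not in TAXONOMY:
        raise ValueError(f"unknown category: {category}")
    if subcategory not in TAXONOMY[category]:
        raise ValueError(f"unknown subcategory for {category}: {subcategory}")
    return Report(video_id, category, subcategory, note)
```

Forcing every report into a known category and subcategory pair is one plausible reason structured menus can speed up review: a moderator receives a report that is already sorted, rather than free text that must be interpreted first.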

Moderation and Review Processes Compared

Social media platforms employ distinct moderation and review processes tailored to their community guidelines and user base size, utilizing a combination of AI algorithms and human moderators to detect harmful content. Facebook implements a multi-tiered review system involving automated detection followed by human review for complex cases, whereas Twitter relies on real-time reporting and rapid response teams to address violations. Both platforms face challenges in balancing free expression with safety, necessitating ongoing updates to policies and technologies to improve accuracy and user trust.
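A tiered flow of automated detection followed by human review can be sketched as a simple routing function. This is an illustration under stated assumptions, not any platform's real pipeline: the `route_flag` name, the idea of a single classifier score between 0 and 1, and both threshold values are hypothetical.

```python
def route_flag(score: float, remove_at: float = 0.95, dismiss_below: float = 0.20) -> str:
    """Route a flagged item based on a hypothetical classifier score in [0, 1].

    Clear-cut cases are handled automatically; everything in between is
    treated as a complex case and sent to a human reviewer.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return "auto_remove"
    if score < dismiss_below:
        return "dismiss"
    return "human_review"


# Example usage with made-up scores.
for s in (0.98, 0.50, 0.05):
    print(s, route_flag(s))
```

Widening the gap between the two thresholds sends more items to human reviewers, which trades review speed for fewer automated mistakes.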

Accuracy and Impact of Flags on YouTube vs Others

YouTube employs advanced machine learning algorithms and human reviewers to ensure the accuracy of content flags, resulting in a more reliable removal of misinformation and harmful videos compared to other social media platforms. The impact of flagging on YouTube is significant, with flagged content often facing immediate demonetization, age restrictions, or deletion, which helps maintain community guidelines and user trust. Other platforms like Facebook and Twitter rely heavily on user reports and automated systems but generally exhibit slower response times and less consistent enforcement, leading to varied effectiveness in controlling flagged content.

Transparency and Feedback After Flagging

Social media platforms enhance user trust by providing transparent processes and clear guidelines for flagged content, ensuring users understand why their posts are reported or removed. Prompt, detailed feedback helps users learn platform standards and encourages compliance with community guidelines. This transparency fosters a safer online environment, reducing misinformation and abusive behavior.

Challenges and Controversies in Content Flagging

Content flagging on social media platforms faces significant challenges such as inconsistent enforcement, false positives, and the sheer volume of posts requiring review. Automated algorithms often struggle to accurately detect nuanced hate speech, misinformation, or context-dependent content, leading to both over-censorship and under-enforcement. Controversies arise regarding transparency, potential bias in moderation practices, and the balance between free expression and harmful content removal.
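Over-censorship and under-enforcement can be expressed with standard classification metrics. The sketch below assumes a moderation team has audited a sample of decisions and counted the four outcomes; the function name `flag_metrics` and the numbers in the usage example are invented for illustration.

```python
def flag_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute basic accuracy metrics for a flagging system.

    tp: violating items that were removed
    fp: acceptable items that were removed (over-censorship)
    fn: violating items that were left up (under-enforcement)
    tn: acceptable items that were left up
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "false_positive_rate": false_positive_rate,
    }


# Example usage with made-up audit counts.
metrics = flag_metrics(tp=80, fp=20, fn=40, tn=860)
print(metrics)
```

Low precision signals over-censorship, while low recall signals under-enforcement; tracking both makes the trade-off described above measurable.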

Future Trends in Online Content Flagging Systems

Future trends in online content flagging systems emphasize the integration of AI-powered algorithms to enhance accuracy and reduce false positives in detecting harmful or misleading content. Your experience will improve as platforms adopt real-time monitoring tools that leverage machine learning to adapt to evolving content patterns swiftly. Enhanced transparency and user control will also become standard, allowing you to participate actively in content moderation processes while ensuring the protection of digital communities.

socmedb.com
