Photo illustration: YouTube Keyword Moderation vs User Moderation

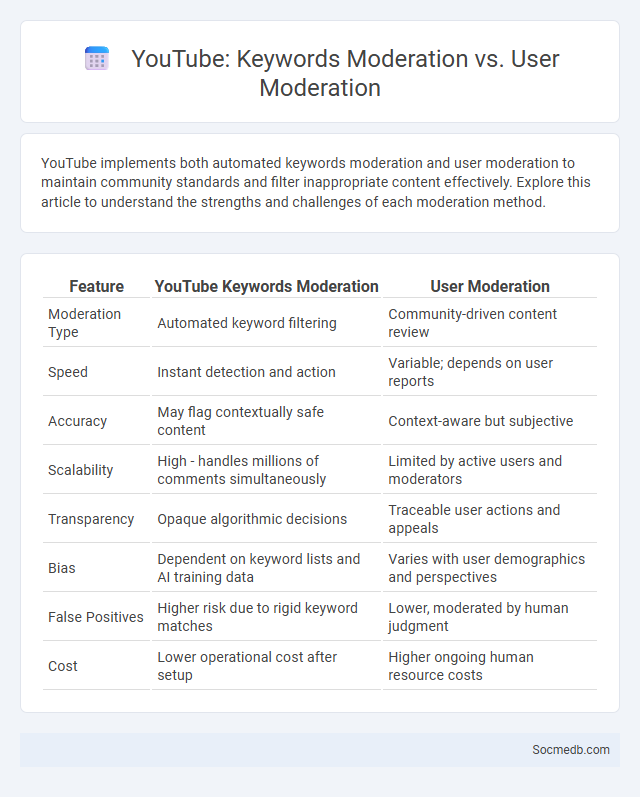

YouTube implements both automated keyword moderation and user moderation to maintain community standards and filter inappropriate content. This article explores the strengths and challenges of each method.

Comparison Table

| Feature | YouTube Keyword Moderation | User Moderation |
|---|---|---|
| Moderation Type | Automated keyword filtering | Community-driven content review |
| Speed | Instant detection and action | Variable; depends on user reports |
| Accuracy | May flag contextually safe content | Context-aware but subjective |
| Scalability | High; handles millions of comments simultaneously | Limited by the number of active users and moderators |
| Transparency | Opaque algorithmic decisions | Traceable user actions and appeals |
| Bias | Depends on keyword lists and AI training data | Varies with user demographics and perspectives |
| False Positives | Higher risk due to rigid keyword matching | Lower; tempered by human judgment |
| Cost | Lower operational cost after setup | Higher ongoing human labor costs |

Introduction to YouTube Moderation Methods

YouTube moderates content with a combination of automated algorithms and human reviewers to enforce its Community Guidelines and keep the platform safe. These methods include AI-driven content filtering, real-time comment monitoring, and user reporting systems that identify inappropriate or harmful content. YouTube also updates its moderation policies regularly to curb misinformation, hate speech, and spam.

Understanding YouTube Keyword Moderation

YouTube keyword moderation manages user-generated content by controlling which words and phrases can appear in comments, titles, and descriptions, enforcing community guidelines and preventing spam or harmful language. The system combines automated algorithms with manual review to detect and block inappropriate, misleading, or offensive terms, making interactions safer for creators and viewers. A well-tuned keyword list blocks harmful terms without suppressing the relevant keywords that help viewers find and discuss your content, which lowers the risk of content removal or channel penalties.
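The filtering step can be sketched in Python. This is a minimal illustration under stated assumptions, not YouTube's implementation: `BLOCKED_WORDS`, `Decision`, `compile_blocklist`, and `moderate_comment` are hypothetical names, and matching is plain case-insensitive whole-word regex. Matching comments are held for review rather than deleted, similar in spirit to the "held for review" behavior of the blocked-words setting in YouTube Studio.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical creator-configured blocklist; not a real YouTube API or setting format.
BLOCKED_WORDS = {"scam", "free giveaway", "buy followers"}


@dataclass
class Decision:
    action: str          # "publish" or "hold_for_review"
    matched: list[str]   # blocked terms found in the comment, lowercased


def compile_blocklist(words: set[str]) -> re.Pattern[str]:
    # Escape each term, try longer phrases first, and require word boundaries
    # so that "scam" does not match inside "scampi".
    alternatives = "|".join(re.escape(w) for w in sorted(words, key=len, reverse=True))
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


BLOCK_PATTERN = compile_blocklist(BLOCKED_WORDS)


def moderate_comment(text: str) -> Decision:
    # Collect every distinct blocked term that appears in the comment.
    matches = sorted({m.group(0).lower() for m in BLOCK_PATTERN.finditer(text)})
    if matches:
        # Hold rather than delete: a human makes the final call.
        return Decision("hold_for_review", matches)
    return Decision("publish", [])
```

For example, `moderate_comment("Click here for a FREE GIVEAWAY")` returns a `Decision` with action `"hold_for_review"` and `matched == ["free giveaway"]`, while `"I ordered scampi"` is published because `scam` only matches as a whole word.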

How User Moderation Works on YouTube

User moderation on YouTube relies on community members to help regulate content: viewers report inappropriate videos and flag comments, and these reports supplement the automated filters that identify rule-violating material. Creators can also appoint trusted users as moderators to manage live chat and comment sections in line with YouTube's Community Guidelines. This collaborative approach combines algorithmic detection with human oversight to address spam, harassment, and harmful content.
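A minimal sketch of how community reports and creator-appointed moderators might fit together. Everything here is hypothetical: `ReportQueue`, `REPORT_THRESHOLD`, and the rule that a comment is hidden once three distinct users report it are illustrative assumptions, not YouTube's actual logic.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical threshold: distinct reporters needed before a comment is hidden.
REPORT_THRESHOLD = 3


class ReportQueue:
    """Sketch of community flagging plus creator-appointed moderators."""

    def __init__(self, moderators: set[str], threshold: int = REPORT_THRESHOLD) -> None:
        self.moderators = moderators
        self.threshold = threshold
        self.reporters: dict[str, set[str]] = defaultdict(set)
        self.hidden: set[str] = set()
        self.removed: set[str] = set()

    def report(self, comment_id: str, user_id: str) -> None:
        # Each user counts at most once per comment.
        self.reporters[comment_id].add(user_id)
        if len(self.reporters[comment_id]) >= self.threshold:
            self.hidden.add(comment_id)

    def moderator_remove(self, comment_id: str, moderator_id: str) -> bool:
        # Only users the creator has appointed may remove content directly.
        if moderator_id not in self.moderators:
            return False
        self.removed.add(comment_id)
        self.hidden.discard(comment_id)
        return True

    def review_queue(self) -> list[str]:
        # Hidden comments awaiting a human decision, most-reported first.
        return sorted(self.hidden, key=lambda c: len(self.reporters[c]), reverse=True)
```

Counting distinct reporters rather than raw reports is a design choice: one account reporting the same comment repeatedly cannot push it into the review queue on its own.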

The Role of Comment Moderation on YouTube

Comment moderation on YouTube helps maintain a positive community by filtering harmful content such as spam, hate speech, and misinformation. Effective moderation tools let creators steer conversations, increase viewer engagement, and protect users from cyberbullying. Strong comment moderation practices protect your channel's reputation and foster meaningful interactions.

Pros and Cons of Keyword Moderation

Keyword moderation improves your social media experience by filtering inappropriate or harmful content, which enhances community safety and platform integrity. However, it can over-filter, censoring benign posts and stifling genuine conversations. Combining precise keyword rules with human oversight keeps moderation effective without frustrating users.
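Over-filtering is easiest to see with a toy blocklist. The sketch below uses hypothetical terms (`hell`, `kill`) and hypothetical helper names to compare naive substring matching with whole-word matching on three benign comments:

```python
import re

# Hypothetical blocklist chosen to show common false positives.
BLOCKED = ["hell", "kill"]


def naive_match(text: str) -> list[str]:
    # Substring test: flags any comment that merely contains the letters.
    lowered = text.lower()
    return [w for w in BLOCKED if w in lowered]


def word_match(text: str) -> list[str]:
    # Whole-word test: the term must have word boundaries on both sides.
    return [w for w in BLOCKED if re.search(rf"\b{re.escape(w)}\b", text, re.IGNORECASE)]


comments = [
    "Hello! Loved the shell scripting tips",
    "You absolutely killed it on stage",
    "This drum solo will kill you",
]
for c in comments:
    print(f"{c!r}: substring={naive_match(c)} whole-word={word_match(c)}")
```

Substring matching flags all three comments. Whole-word matching clears the first two but still flags the third, which is praise rather than a threat: rules alone cannot read context, which is why human oversight remains necessary.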

Benefits and Drawbacks of User Moderation

User moderation on social media empowers communities to maintain platform integrity: users report inappropriate content and help enforce community guidelines, which improves user experience and safety. This decentralized approach spreads the review workload across many people, which can speed up review on active channels, and it fosters a sense of ownership among users. However, it can also lead to inconsistent enforcement and bias, affecting fairness and content diversity. Balancing user-driven moderation with automated systems and professional oversight reduces the risk of censorship and helps keep the online environment respectful and inclusive.

Comment Moderation: Strengths and Weaknesses

Effective comment moderation on social media keeps the community atmosphere positive by filtering out spam, hate speech, and harassment, so that discussions stay respectful and relevant. However, automated moderation tools can struggle with context and nuance, flagging legitimate comments as inappropriate while missing subtle violations. Balancing strict policies against freedom of expression is crucial for your platform's credibility and user engagement.

Comparing Effectiveness: Keyword vs User vs Comment Moderation

Keyword filtering, user moderation, and comment moderation differ in effectiveness. Keyword filtering blocks offensive language efficiently but can miss context-sensitive issues. User moderation builds community trust by letting participants flag inappropriate behavior, though it depends on active engagement to maintain quality control. Your choice should balance automation and human oversight to optimize content quality and user experience.

Best Practices for Combining Moderation Tools

Combining moderation tools helps you identify and resolve harmful content quickly. Pairing AI-powered filters with human review improves accuracy while preserving community trust, and the analytics these tools produce help you refine policies and protect your brand's reputation across platforms.
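One way to combine the tools is a three-stage pipeline: an automated keyword screen, escalation on user reports, and a final human decision. The sketch below uses hypothetical names (`Comment`, `ModerationPipeline`, `report_threshold`) and tracks one simple analytic, the share of keyword holds that reviewers overturn per term, as an example of using moderation data to refine policy. It illustrates the idea and does not describe any platform's actual system.

```python
from __future__ import annotations

import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass(eq=False)  # identity comparison, so list.remove() targets this exact comment
class Comment:
    comment_id: str
    text: str
    reports: int = 0
    status: str = "pending"  # pending -> published / held -> published / removed
    matched: list[str] = field(default_factory=list)


class ModerationPipeline:
    """Hypothetical pipeline: keyword screen, user reports, human review."""

    def __init__(self, blocked: list[str], report_threshold: int = 3) -> None:
        self.patterns = {w: re.compile(rf"\b{re.escape(w)}\b", re.IGNORECASE) for w in blocked}
        self.report_threshold = report_threshold
        self.review_queue: list[Comment] = []
        # Analytics: holds per blocked term, and how many reviewers overturned.
        self.held_by_term: Counter[str] = Counter()
        self.overturned_by_term: Counter[str] = Counter()

    def submit(self, c: Comment) -> None:
        # Stage 1: automated keyword screen (fast, cheap, context-blind).
        c.matched = [w for w, p in self.patterns.items() if p.search(c.text)]
        if c.matched:
            c.status = "held"
            self.review_queue.append(c)
            self.held_by_term.update(c.matched)
        else:
            c.status = "published"

    def report(self, c: Comment) -> None:
        # Stage 2: community reports catch what the keyword list missed.
        c.reports += 1
        if c.status == "published" and c.reports >= self.report_threshold:
            c.status = "held"
            self.review_queue.append(c)

    def human_review(self, c: Comment, violates: bool) -> None:
        # Stage 3: a person makes the final, context-aware call.
        if c in self.review_queue:
            self.review_queue.remove(c)
        c.status = "removed" if violates else "published"
        if not violates:
            self.overturned_by_term.update(c.matched)

    def overturn_rate(self, term: str) -> float:
        # A high rate suggests the term mostly catches benign comments.
        held = self.held_by_term[term]
        return self.overturned_by_term[term] / held if held else 0.0


pipe = ModerationPipeline(blocked=["kill"], report_threshold=2)
a = Comment("c1", "This drum solo will kill you")
b = Comment("c2", "Subtle harassment with no blocked words")
pipe.submit(a)
pipe.submit(b)
pipe.report(b)
pipe.report(b)
pipe.human_review(a, violates=False)  # praise, not a threat
pipe.human_review(b, violates=True)
print(a.status, b.status, pipe.overturn_rate("kill"))  # published removed 1.0
```

A term with a high overturn rate, like `kill` in this example, is a candidate for removal from the list or for replacement with a narrower phrase.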

Choosing the Right Moderation Strategy for Your Channel

Choosing an effective moderation strategy for your channel is crucial for fostering a positive community and protecting your brand's reputation. Combining automated filters, keyword blocking, and human moderation removes harmful content quickly while preserving authentic engagement. Analyzing platform-specific user behavior and community guidelines helps you tailor the approach, balancing user freedom with safety and compliance.

socmedb.com