Photo illustration: Facebook Bullying Moderation vs Instagram Bullying Moderation

Facebook combines AI classifiers with human moderators to detect and remove bullying content, with an emphasis on context and rapid intervention. Instagram adds image recognition and prominent user-reporting tools to its bullying moderation. This article compares how the two platforms moderate bullying and how effective each approach is.

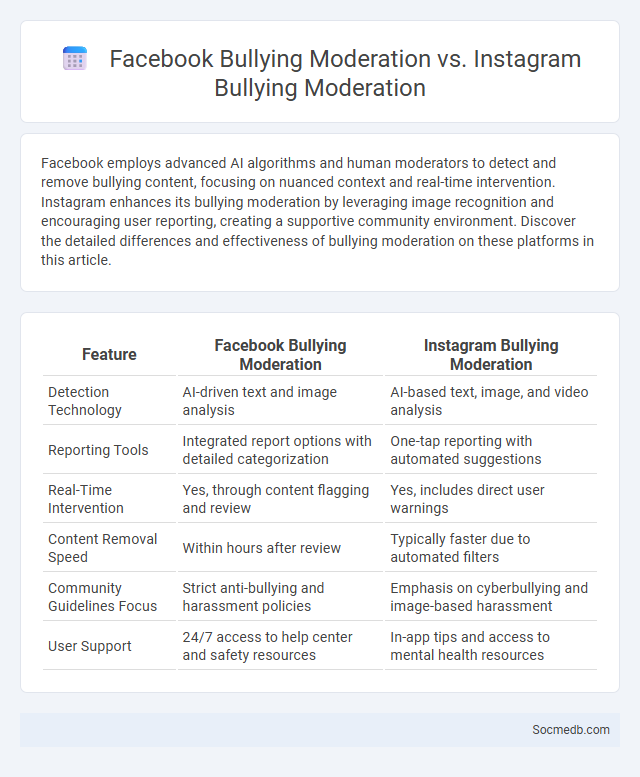

Table of Comparison

| Feature | Facebook Bullying Moderation | Instagram Bullying Moderation |
|---|---|---|
| Detection Technology | AI-driven text and image analysis | AI-based text, image, and video analysis |
| Reporting Tools | Integrated report options with detailed categorization | One-tap reporting with automated suggestions |
| Real-Time Intervention | Yes, through content flagging and review | Yes, includes direct user warnings |
| Content Removal Speed | Within hours after review | Typically faster due to automated filters |
| Community Guidelines Focus | Strict anti-bullying and harassment policies | Emphasis on cyberbullying and image-based harassment |
| User Support | 24/7 access to help center and safety resources | In-app tips and access to mental health resources |

Overview of Facebook and Instagram Bullying Moderation

Facebook and Instagram both use AI systems and human moderators to detect and address bullying. Automated systems flag harmful language and behavior quickly, and users get tools to report abuse and control who can interact with them. Both platforms also let you filter offensive comments and point you to support resources.

Key Differences Between Facebook and Instagram Bullying Policies

Facebook's bullying policies rest on detailed community standards, backed by extensive reporting and support tools that target harassment and hate speech. Instagram focuses on visual content moderation, using AI to detect harmful images and comments, with features such as comment filtering and Restrict to protect users. In practice, Facebook's approach centers on text-based interactions, while Instagram tailors its approach to photo and video sharing.

Content Moderation: Definition and Importance

Content moderation is the process of monitoring, evaluating, and managing user-generated content on social media platforms so that it complies with community guidelines and legal regulations. Effective moderation protects users from harmful, offensive, or misleading content and helps keep the platform safe and trustworthy. Social media companies invest heavily in AI-driven algorithms and human moderators to balance free expression against the prevention of misinformation, hate speech, and cyberbullying.

Automated Tools Used for Bullying Detection

Automated tools for bullying detection on social media use machine learning and natural language processing to identify harmful content in real time. These systems look for patterns such as aggressive language, hate speech, and other cyberbullying indicators on platforms like Facebook, Twitter, and Instagram. They enable prompt intervention and reduce the spread of abusive behavior.
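As a rough illustration of the simplest form of automated filtering, the sketch below hides comments that contain a blocked word. It is a toy under stated assumptions: the `BLOCKED_TERMS` list and the function names are hypothetical, and it does not represent how Facebook or Instagram implement detection, which relies on trained models rather than fixed word lists.

```python
import re

# Hypothetical blocklist for illustration only; production systems rely on
# trained classifiers rather than fixed word lists.
BLOCKED_TERMS = {"loser", "idiot", "ugly"}


def flag_comment(text, blocked=BLOCKED_TERMS):
    """Return True if any blocked term appears as a whole word in the comment."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in blocked for word in words)


def filter_comments(comments):
    """Split comments into (visible, hidden) lists using flag_comment."""
    visible, hidden = [], []
    for comment in comments:
        if flag_comment(comment):
            hidden.append(comment)
        else:
            visible.append(comment)
    return visible, hidden
```

A word list like this misses context, sarcasm, and misspellings, which is one reason platforms pair automated detection with human review.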

Human Moderators: Roles Across Platforms

Human moderators maintain community standards by reviewing content for policy violations on social media sites such as Facebook, Twitter, and Instagram. They assess user reports and enforce guidelines on hate speech, misinformation, and graphic content, which helps keep the environment safe and respectful. Human moderators complement AI tools by providing the nuanced judgment and contextual understanding that algorithms often lack.
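The division of labor between automated scoring and human review can be sketched as a simple routing rule. This is a minimal sketch, not either platform's actual pipeline: the `route` function, the `Decision` type, and both threshold values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "remove", "human_review", or "allow"
    reason: str


def route(score, user_reported, remove_at=0.95, review_at=0.5):
    """Route content based on a classifier score in [0, 1] and user reports.

    The thresholds are hypothetical; real systems tune them per policy area.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        return Decision("remove", "high-confidence automated match")
    if score >= review_at or user_reported:
        # Ambiguous cases and anything a user reported go to a person.
        return Decision("human_review", "ambiguous score or user report")
    return Decision("allow", "low score and no report")
```

Under these assumptions, only high-confidence matches are removed automatically, while user reports always reach a human reviewer even when the classifier score is low.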

Reporting and Appeals Processes Compared

Reporting and appeals processes on social media platforms vary significantly in transparency, response time, and user control, which affects how effectively harmful content is managed. Platforms like Facebook and Twitter offer in-app reporting tools backed by automated filters and human review. Appeal mechanisms differ more widely: some provide detailed feedback, while others offer only limited options. Understanding these processes helps you navigate disputes more efficiently on the social networks you use.

Effectiveness of AI in Bullying Moderation

AI-driven systems help social media platforms detect and moderate bullying quickly using natural language processing and machine learning. They analyze large volumes of user-generated content and can adapt to new patterns of online harassment, although they often lack the contextual judgment that human review provides. As these systems evolve, they are intended to limit the spread of abusive language and protect vulnerable users.

User Privacy and Data Handling in Moderation

User privacy is a critical concern on social media platforms, and content moderation requires robust data handling practices to protect sensitive information. Because moderation relies on both AI algorithms and human reviewers, platforms need clear policies that balance transparency with confidentiality. Regulations such as GDPR require that user data be processed securely, which reduces the risk of unauthorized access or misuse.

Community Guidelines: Facebook vs. Instagram

Facebook and Instagram enforce distinct Community Guidelines tailored to their platforms. Facebook emphasizes content that promotes meaningful interactions and prohibits hate speech, misinformation, and harmful behavior. Instagram focuses on preventing bullying, harassment, and inappropriate content to maintain a positive visual experience. Both platforms monitor and remove violating content using AI and human review, so knowing the guidelines helps you engage safely and responsibly. Following Facebook's broader rules and Instagram's visual-centric rules helps protect your account and fosters a respectful online community.

Future Trends in Social Media Content Moderation

Future trends in social media content moderation point to greater use of artificial intelligence and machine learning to detect harmful content more accurately. Platforms are expected to adopt real-time moderation tools that adapt to evolving language and context, with the aim of reducing misinformation and toxic behavior. Greater transparency and user control over moderation decisions are likely to become standard as a way to build trust and safety in online communities.

socmedb.com
