Photo illustration: Facebook Community Standards vs Google Content Policy

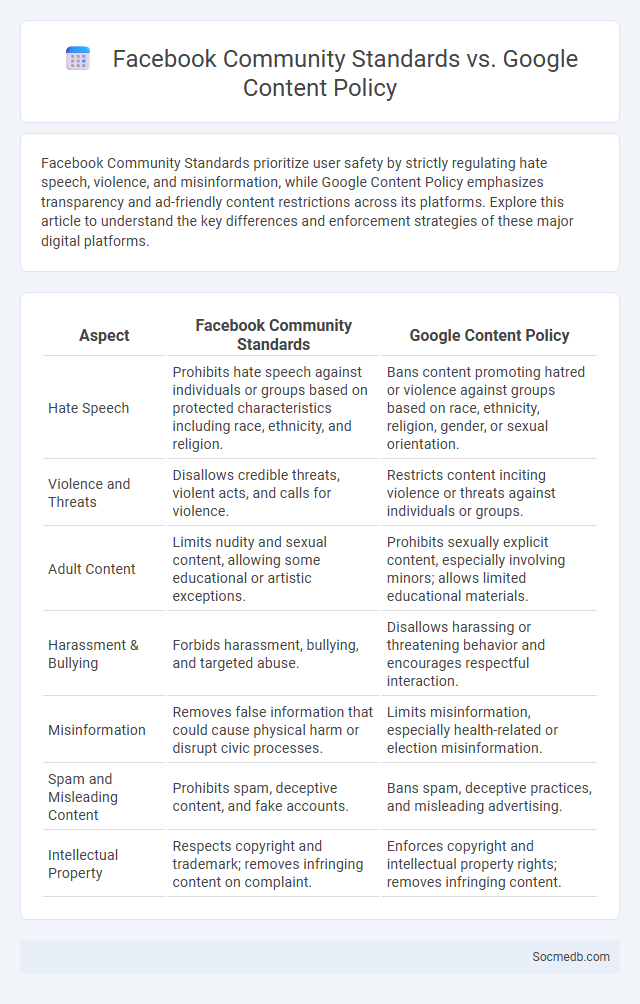

Facebook Community Standards prioritize user safety by strictly regulating hate speech, violence, and misinformation, while Google Content Policy emphasizes transparency and ad-friendly content restrictions across its platforms. This article examines the key differences between the two policies and the enforcement strategies each platform uses.

Comparison Table

| Aspect | Facebook Community Standards | Google Content Policy |
|---|---|---|
| Hate Speech | Prohibits hate speech against individuals or groups based on protected characteristics, including race, ethnicity, and religion. | Bans content promoting hatred or violence against groups based on race, ethnicity, religion, gender, or sexual orientation. |
| Violence and Threats | Disallows credible threats, violent acts, and calls for violence. | Restricts content inciting violence or threats against individuals or groups. |
| Adult Content | Limits nudity and sexual content, allowing some educational or artistic exceptions. | Prohibits sexually explicit content, especially involving minors; allows limited educational materials. |
| Harassment & Bullying | Forbids harassment, bullying, and targeted abuse. | Disallows harassing or threatening behavior and encourages respectful interaction. |
| Misinformation | Removes false information that could cause physical harm or disrupt civic processes. | Limits misinformation, especially health-related or election misinformation. |
| Spam and Misleading Content | Prohibits spam, deceptive content, and fake accounts. | Bans spam, deceptive practices, and misleading advertising. |
| Intellectual Property | Respects copyright and trademark; removes infringing content on complaint. | Enforces copyright and intellectual property rights; removes infringing content. |

Introduction to Online Content Policies

Online content policies establish clear guidelines for acceptable behavior and content across social media and other online platforms to ensure safe and respectful user interactions. These policies address issues such as hate speech, misinformation, harassment, and copyright infringement, helping platforms maintain legal compliance and protect user rights. Understanding and following these policies is essential for users and creators who want to foster a trustworthy and inclusive digital environment.

Overview of Facebook Community Standards

Facebook Community Standards define the rules that govern user behavior and content to maintain a safe and respectful environment. These standards address issues such as hate speech, harassment, misinformation, graphic content, and privacy violations to protect your experience. Enforcement includes content review and penalties that range from warnings to account suspension, depending on how severe a violation is and whether it is repeated.
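To make the idea of graduated penalties concrete, here is a minimal sketch of a strike-based escalation ladder. It is purely illustrative: the severity tiers, strike weights, thresholds, and the intermediate "feature_restriction" step are hypothetical assumptions, not Meta's actual enforcement rules. The only point taken from the text is that penalties escalate from warnings to suspension with severity and repetition.

```python
from dataclasses import dataclass, field
from enum import Enum


class Severity(Enum):
    # Hypothetical severity tiers; the value is the number of strikes added.
    LOW = 1
    HIGH = 2
    SEVERE = 3


# Hypothetical strike thresholds, checked from the highest threshold down.
PENALTY_LADDER = [
    (6, "account_suspension"),
    (3, "feature_restriction"),  # assumed intermediate step
    (1, "warning"),
]


@dataclass
class Account:
    user_id: str
    strikes: int = 0
    history: list = field(default_factory=list)


def enforce(account: Account, severity: Severity) -> str:
    """Record a confirmed violation and return the resulting penalty."""
    account.strikes += severity.value
    if severity is Severity.SEVERE:
        # In this sketch the most severe violations skip the ladder entirely.
        penalty = "account_suspension"
    else:
        # Pick the first (highest) threshold the account has reached.
        penalty = next(p for threshold, p in PENALTY_LADDER if account.strikes >= threshold)
    account.history.append(penalty)
    return penalty
```

For example, an account with one low-severity violation receives a warning, while a later high-severity violation pushes it to three strikes and a feature restriction. Weighting strikes by severity is one way to capture "severity and repeated offenses" in a single counter; real systems may also expire strikes over time.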

Key Features of Google Content Policy

Google Content Policy emphasizes user safety, prohibiting hate speech, harassment, and harmful misinformation across Google's products and services. It emphasizes transparency in content moderation and enforces strict rules against illegal activities, adult content, and deceptive behavior. The policy promotes authentic user interactions while protecting intellectual property and user privacy.

Defining Generic Community Standards

Generic community standards on social media establish fundamental guidelines that promote respectful interaction, prohibit hate speech, harassment, and misinformation, while ensuring user safety and content appropriateness. These standards are crafted to create an inclusive digital environment by balancing freedom of expression with the necessity to prevent harmful behaviors. Enforcement mechanisms rely on automated detection algorithms and human moderation to maintain platform integrity and compliance with legal regulations.

Comparison: Scope and Coverage

Social media platforms vary significantly in scope and coverage, with Facebook offering a broad user base spanning diverse demographics, while LinkedIn focuses specifically on professional networking and career development. Instagram emphasizes visual content appealing to younger audiences, whereas Twitter prioritizes real-time news and concise communication. Understanding these distinctions helps you select the most effective platform for your marketing or personal engagement goals.

Approach to Hate Speech and Harassment

Social media platforms implement comprehensive policies to detect, moderate, and remove hate speech and harassment, leveraging AI algorithms combined with human review to ensure accuracy. User reporting systems and proactive monitoring identify harmful content, while educational campaigns promote respectful communication and digital civility. Enforcement measures include content removal, account suspension, and collaboration with law enforcement to address severe violations effectively.

Handling Misinformation and Fake News

Handling misinformation and fake news on social media requires critical evaluation of sources and fact-checking before sharing content. You can protect your digital reputation by recognizing misleading headlines, verifying information through trusted news outlets, and using platform tools designed for reporting false content. Engaging responsibly helps curb the spread of false narratives and promotes a more informed online community.

Content Moderation and Enforcement Mechanisms

Effective content moderation on social media platforms involves a combination of AI-driven algorithms and human moderators to identify and remove harmful or inappropriate content swiftly. Enforcement mechanisms include automated flagging systems, user reporting tools, and graduated penalties such as content removal, account suspension, or permanent bans. These strategies help maintain community standards, reduce the spread of misinformation, and protect users from harassment and abuse.
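The combination of automated flagging, user reports, and human moderators described above can be sketched as a simple triage function. This is a hypothetical illustration: the classifier score, the thresholds, and the report count are assumptions chosen for the example, not values used by Facebook, Google, or any other platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Post:
    post_id: str
    model_score: float    # hypothetical classifier probability that the post violates policy
    user_reports: int = 0


# Hypothetical thresholds; a real platform would tune these per policy area.
AUTO_REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60
REPORTS_FOR_REVIEW = 3


def triage(post: Post) -> str:
    """Route a post to automatic removal, human review, or no action."""
    if post.model_score >= AUTO_REMOVE_THRESHOLD:
        return "remove"           # high-confidence automated flag
    if post.model_score >= REVIEW_THRESHOLD or post.user_reports >= REPORTS_FOR_REVIEW:
        return "human_review"     # uncertain model output or enough user reports
    return "no_action"


def review_queue(posts: List[Post]) -> List[str]:
    """Return IDs of posts needing human review, most likely violations first."""
    pending = [p for p in posts if triage(p) == "human_review"]
    pending.sort(key=lambda p: (p.model_score, p.user_reports), reverse=True)
    return [p.post_id for p in pending]
```

The design choice here is to let automation act alone only when it is highly confident, and to send borderline or heavily reported content to human moderators, which is the division of labor the paragraph above describes.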

Impact on Users and Content Creators

Social media platforms significantly influence users' mental health by shaping self-esteem and social interactions, which can lead both to positive connections and to increased anxiety. Content creators benefit from expanded audience reach and monetization opportunities, yet face challenges such as algorithmic pressures and content saturation. Survey data suggests that more than half of users report emotional effects related to social media use, highlighting its complex impact on digital communities.

Future Trends in Content Policies and Community Standards

Social media platforms are likely to rely increasingly on AI-driven content moderation to detect and remove harmful or misleading information more efficiently. You can expect policies to emphasize transparency, user privacy, and tailored community standards that reflect diverse cultural norms and foster inclusive online environments. Platforms are also likely to adopt stricter rules against misinformation, hate speech, and digital harassment to make user experiences safer.

socmedb.com