Photo illustration: Facebook Community Standards vs Twitter Rules

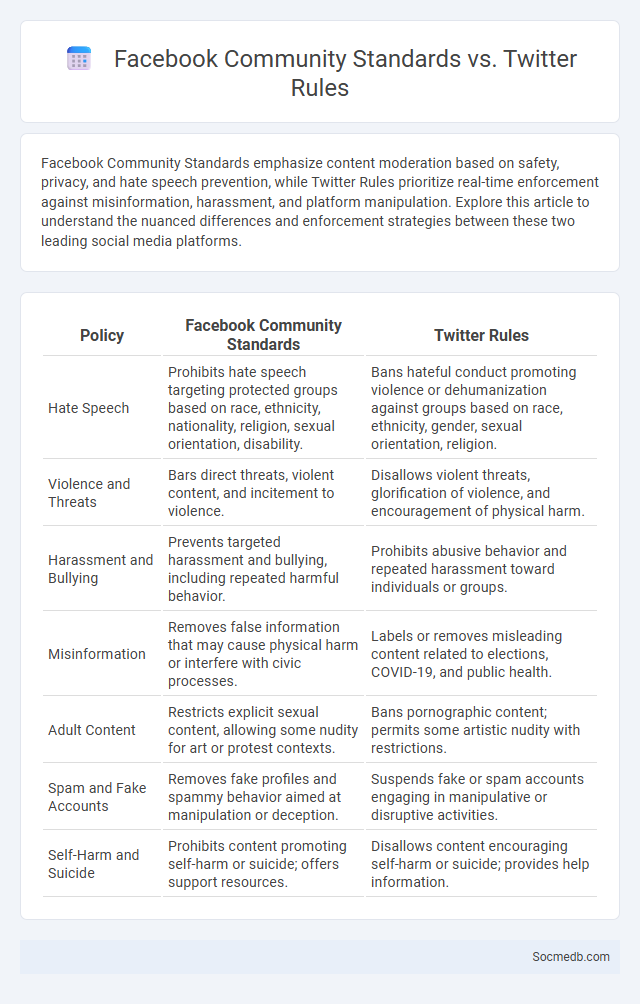

Facebook Community Standards emphasize content moderation based on safety, privacy, and hate speech prevention, while Twitter Rules prioritize real-time enforcement against misinformation, harassment, and platform manipulation. Explore this article to understand the nuanced differences and enforcement strategies between these two leading social media platforms.

Table of Comparison

| Policy | Facebook Community Standards | Twitter Rules |
|---|---|---|
| Hate Speech | Prohibits hate speech targeting protected groups based on race, ethnicity, nationality, religion, sexual orientation, disability. | Bans hateful conduct promoting violence or dehumanization against groups based on race, ethnicity, gender, sexual orientation, religion. |
| Violence and Threats | Bars direct threats, violent content, and incitement to violence. | Disallows violent threats, glorification of violence, and encouragement of physical harm. |
| Harassment and Bullying | Prevents targeted harassment and bullying, including repeated harmful behavior. | Prohibits abusive behavior and repeated harassment toward individuals or groups. |
| Misinformation | Removes false information that may cause physical harm or interfere with civic processes. | Labels or removes misleading content related to elections, COVID-19, and public health. |
| Adult Content | Restricts explicit sexual content, allowing some nudity for art or protest contexts. | Bans pornographic content; permits some artistic nudity with restrictions. |
| Spam and Fake Accounts | Removes fake profiles and spammy behavior aimed at manipulation or deception. | Suspends fake or spam accounts engaging in manipulative or disruptive activities. |
| Self-Harm and Suicide | Prohibits content promoting self-harm or suicide; offers support resources. | Disallows content encouraging self-harm or suicide; provides help information. |

Understanding Facebook Community Standards

Facebook Community Standards establish clear rules to maintain a safe and respectful environment, addressing issues like hate speech, harassment, and misinformation. These guidelines are continuously updated to reflect evolving social norms and legal requirements, ensuring user protection and platform integrity. Violations can result in content removal, account suspension, or permanent bans, emphasizing the importance of compliance for all users.

Overview of Twitter Rules

Twitter Rules establish clear guidelines to maintain a safe and respectful platform, prohibiting hate speech, harassment, and misinformation. Enforcement includes content removal, account suspension, and permanent bans for repeated violations. These policies aim to promote healthy conversations while protecting users from harmful behavior.

What Are General Community Standards?

General community standards on social media are guidelines designed to create a safe and respectful online environment by prohibiting hate speech, harassment, and harmful content. These standards help protect your experience by moderating posts, comments, and user behavior to ensure compliance with platform policies. Understanding these rules enables you to engage responsibly while avoiding penalties such as content removal or account suspension.

Key Differences Between Facebook and Twitter Policies

Facebook's policies emphasize detailed community standards with strict guidelines on hate speech, misinformation, and content removal, applying a broad approach to user privacy and data usage. Twitter prioritizes real-time communication rules, focusing heavily on combating harassment, misinformation, and platform manipulation through rapid content moderation and user suspension. Both platforms enforce policies distinctly tailored to their user engagement models, with Facebook leveraging extensive content filters and Twitter emphasizing dynamic interaction oversight.

Content Moderation Approaches: Facebook vs. Twitter

Facebook employs a hybrid content moderation approach that combines AI algorithms with extensive human review teams to manage billions of daily interactions across its platform. Twitter, now rebranded as X, uses a more agile moderation model that emphasizes real-time AI detection, complemented by user reporting tools, to address misinformation and harmful content swiftly. Both platforms invest heavily in machine learning technologies, though Facebook's scale calls for more detailed policies and regional adaptations than Twitter's more centralized framework.
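
To make the hybrid idea concrete, here is a minimal Python sketch of how an automated score and user reports might route a post to removal, human review, or no action. It does not describe either platform's actual system: the `Post` type, the `toy_score` function, the `triage` function, and every threshold below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Post:
    post_id: str
    text: str
    user_reports: int = 0


# Hypothetical thresholds, picked only to illustrate the routing logic.
REMOVE_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5
REPORTS_FOR_REVIEW = 3


def toy_score(post: Post) -> float:
    """Stand-in for a trained classifier: share of words that are flagged terms."""
    flagged_terms = {"scam", "threat"}  # illustrative word list, not a real policy
    words = post.text.lower().split()
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in flagged_terms)
    return hits / len(words)


def triage(post: Post, score: float) -> Action:
    """Route a post using an automated score plus the number of user reports."""
    if score >= REMOVE_THRESHOLD:
        # High-confidence automated detection: act without waiting for a person.
        return Action.REMOVE
    if score >= REVIEW_THRESHOLD or post.user_reports >= REPORTS_FOR_REVIEW:
        # Uncertain score, or enough user reports: send to a human reviewer.
        return Action.HUMAN_REVIEW
    return Action.ALLOW


if __name__ == "__main__":
    post = Post(post_id="1", text="limited offer scam", user_reports=4)
    print(triage(post, toy_score(post)))
```

In this sketch, automated scoring handles the clear cases, while borderline scores and heavily reported posts fall through to human review, which is the division of labor the paragraph above describes.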

Hate Speech and Harassment Policies: A Comparison

Social media platforms enforce Hate Speech and Harassment Policies to mitigate online abuse and foster safe user environments. Facebook employs AI-driven detection and human review to identify hate speech, prohibiting content targeting protected characteristics, while Twitter emphasizes user reporting and suspension of repeat offenders. Instagram combines automated filters and community guidelines to address harassment, promoting respectful interactions through content removal and banning violators.

Misinformation Handling: Facebook vs. Twitter

Facebook employs comprehensive misinformation handling strategies including AI-driven content analysis, third-party fact-checkers, and user reporting systems to limit false information spread. Twitter utilizes real-time content moderation, warning labels on misleading tweets, and suspension of repeat offenders to maintain information integrity. Both platforms continuously update their algorithms to improve detection accuracy and reduce misinformation's impact on users.

Privacy and User Data Protection Standards

Social media platforms implement strict privacy and user data protection standards to safeguard personal information from unauthorized access and misuse. Encryption protocols, regular security audits, and transparent data usage policies ensure compliance with regulations like GDPR and CCPA. These measures build trust by giving users control over their data through customizable privacy settings and consent options.

Enforcement Measures and User Appeals

Social media platforms implement enforcement measures such as content removal, account suspension, and algorithmic moderation to combat misinformation and harmful behavior. Users can appeal enforcement decisions through dedicated support channels, often involving a review process by moderation teams or automated systems. Transparent policies and timely responses are critical for maintaining trust and fairness in user appeal mechanisms.

Impact on User Experience and Online Communities

Social media platforms shape user experience by personalizing content feeds through advanced algorithms, boosting engagement and satisfaction. Online communities thrive as these platforms enable real-time communication, fostering connections and collective identity among members. By understanding these dynamics, your interaction with social media can become more meaningful and positive.

socmedb.com
