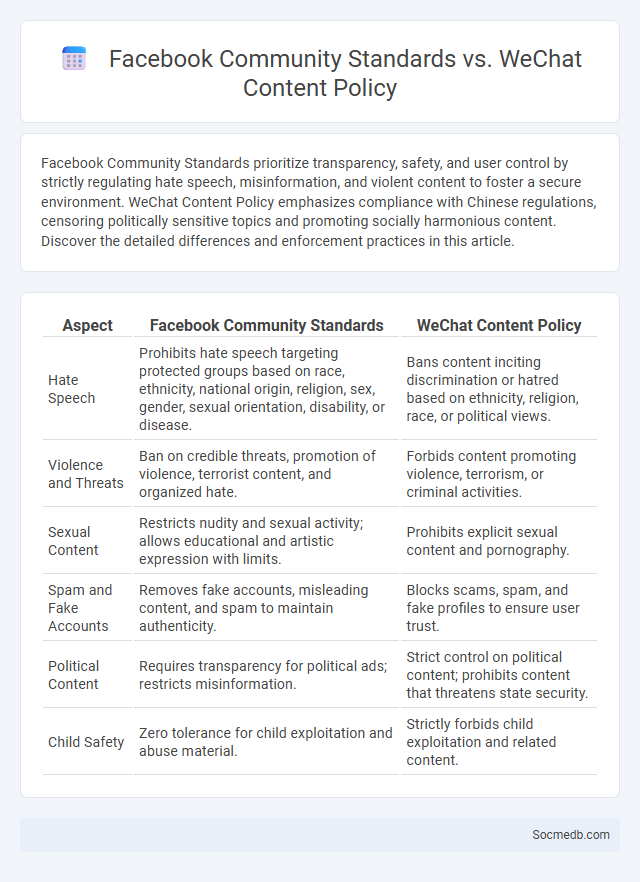

Photo illustration: Facebook Community Standards vs WeChat Content Policy

Facebook Community Standards prioritize transparency, safety, and user control, strictly regulating hate speech, misinformation, and violent content to foster a secure environment. WeChat's Content Policy emphasizes compliance with Chinese regulations, censoring politically sensitive topics and promoting content considered socially harmonious. This article examines how the two policies differ and how each is enforced.

Table of Comparison

| Aspect | Facebook Community Standards | WeChat Content Policy |
|---|---|---|
| Hate Speech | Prohibits hate speech targeting people based on protected characteristics such as race, ethnicity, national origin, religion, sex, gender, sexual orientation, disability, or serious disease. | Bans content inciting discrimination or hatred based on ethnicity, religion, race, or political views. |
| Violence and Threats | Bans credible threats, promotion of violence, terrorist content, and organized hate. | Forbids content promoting violence, terrorism, or criminal activities. |
| Sexual Content | Restricts nudity and sexual activity; allows educational and artistic expression within limits. | Prohibits explicit sexual content and pornography. |
| Spam and Fake Accounts | Removes fake accounts, misleading content, and spam to maintain authenticity. | Blocks scams, spam, and fake profiles to maintain user trust. |
| Political Content | Requires transparency for political ads; restricts misinformation. | Strictly controls political content; prohibits content that threatens state security. |
| Child Safety | Zero tolerance for child exploitation and abuse material. | Strictly forbids child exploitation and related content. |

Introduction to Social Media Platform Regulations

Social media platform regulations encompass the legal and policy frameworks that govern online content, user behavior, and data privacy on major networks such as Facebook, Twitter, and Instagram. They address issues such as misinformation, hate speech, copyright infringement, and user protection, while requiring compliance with regional laws such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Enforcement typically combines automated content moderation, user reporting systems, and cooperation with regulatory bodies to keep digital environments safe and accountable.

Overview of Facebook Community Standards

Facebook Community Standards set out guidelines intended to maintain a safe and respectful environment across the platform. They cover key areas such as hate speech, violence, nudity, misinformation, and harassment, with the aim of keeping content within legal and ethical norms. Enforcement ranges from removing content to restricting or disabling accounts, with the goal of protecting users and promoting authentic interactions.

Key Features of WeChat Content Policy

WeChat's Content Policy strictly regulates user-generated content, prohibiting politically sensitive material, misinformation, and harmful or illegal activity. It combines automated, AI-based detection with human review to find and remove content that violates its rules on hate speech, violence, and explicit material. The policy is designed to keep the platform compliant with China's cybersecurity laws while maintaining platform safety and a responsible digital environment.

Comparing Enforcement Mechanisms

Social media platforms implement diverse enforcement mechanisms to regulate user behavior, including algorithmic content detection, user reporting systems, and human moderation teams. Facebook employs AI-driven content filtering combined with human reviewers to handle violations, whereas Twitter relies heavily on user reports supplemented by automated flags and a dedicated safety team. YouTube integrates machine learning algorithms to detect harmful content alongside community flagging, aiming for swift removal and policy enforcement.

Content Moderation: Similarities and Differences

Content moderation on social media platforms involves monitoring user-generated content to enforce community guidelines and legal standards and to keep online environments safe and respectful. While all major platforms use a mix of automated algorithms and human reviewers, they differ in the extent of censorship, the transparency of their decisions, and the appeal processes they offer. Facebook emphasizes AI-driven detection of hate speech, Twitter prioritizes real-time removal of misinformation, and YouTube focuses heavily on copyright enforcement, reflecting moderation strategies tailored to each platform's content challenges.

Approach to Hate Speech and Misinformation

Social media platforms use machine learning and AI-driven content moderation to identify and remove hate speech and misinformation quickly. Policies are updated regularly to address evolving threats, and community reporting and fact-checking partnerships help improve detection accuracy. Transparency reports and user education initiatives support these efforts to create safer online environments.

User Privacy and Data Handling Practices

Social media platforms aim to protect user privacy through data handling practices such as encryption (including end-to-end encryption on some messaging services), anonymization of personal information, and compliance with the GDPR and CCPA. Monitoring systems flag suspicious activity and unauthorized data access to reduce the risk of data breaches and help keep your information secure. Transparent privacy policies and consent mechanisms give you control over what data is collected and how it is shared, reinforcing trust between users and social media companies.

Appeals and Account Recovery Processes

Social media platforms offer appeals processes that let users contest content removals or account suspensions, with the aim of applying community guidelines fairly. Account recovery mechanisms often involve multi-factor authentication, identity verification, and support ticket systems to securely restore access after hacking, forgotten passwords, or policy-related suspensions. Efficient appeals and recovery processes strengthen user trust and platform integrity and minimize downtime caused by account access issues.

Regional Influences and Legal Compliance

Regional influences significantly shape social media trends, with cultural norms, language, and local events affecting content engagement and platform popularity. Legal compliance requires strict adherence to regional regulations such as the GDPR in Europe or the CCPA in California, which govern data privacy and user consent protocols. Ensuring that your social media strategy respects these local legal frameworks protects your brand from penalties and fosters trust with your audience.

Impact on User Experience and Freedom of Expression

Social media platforms significantly shape user experience by personalizing content through algorithms that influence engagement and information exposure. These platforms provide a vital space for freedom of expression, enabling diverse voices to share opinions and foster public discourse. However, balancing content moderation against the protection of free speech remains a critical challenge that affects user trust and platform dynamics.

socmedb.com