Photo illustration: Facebook Tag Review vs Content Moderation

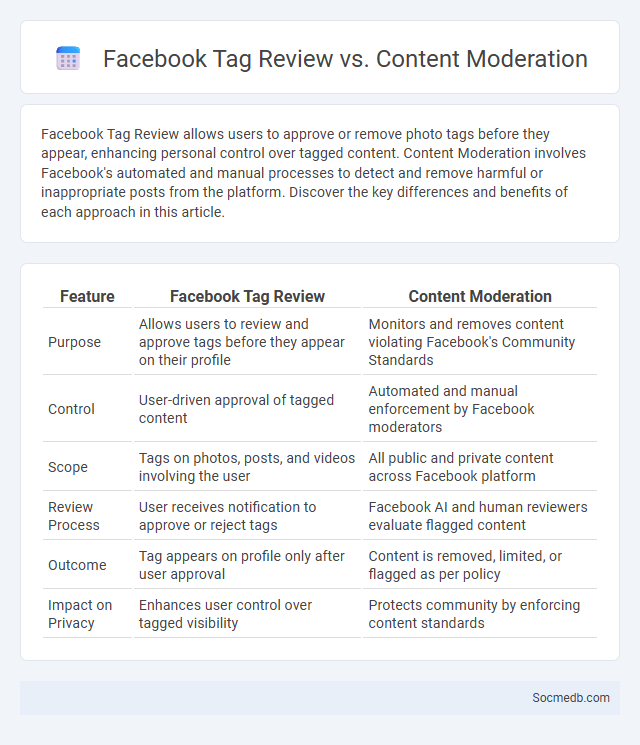

Facebook Tag Review allows users to approve or remove photo tags before they appear, enhancing personal control over tagged content. Content Moderation involves Facebook's automated and manual processes to detect and remove harmful or inappropriate posts from the platform. Discover the key differences and benefits of each approach in this article.

Table of Comparison

| Feature | Facebook Tag Review | Content Moderation |
|---|---|---|
| Purpose | Lets users review and approve tags before they appear on their profile | Monitors and removes content that violates Facebook's Community Standards |
| Control | User-driven approval of tagged content | Automated systems and human moderators enforce policy |
| Scope | Tags on photos, posts, and videos involving the user | All public and private content across the Facebook platform |
| Review Process | User receives a notification to approve or reject each tag | Facebook's AI systems and human reviewers evaluate flagged content |
| Outcome | Tag appears on the profile only after user approval | Content is removed, limited in reach, or flagged according to policy |
| Impact on Privacy | Gives users more control over where tagged content appears | Protects the community by enforcing content standards |

Introduction to Facebook Tag Review and Content Moderation

Facebook Tag Review lets you control which tags appear on your profile by reviewing posts you are tagged in before they are added to it. Content moderation on Facebook involves filtering and managing user-generated content to ensure it follows the Community Standards, removing harmful or inappropriate material. Together, these tools help you maintain a safe and personalized social media experience.

Understanding Tag Review on Facebook

Facebook's Tag Review feature lets users control the tags that others add to their posts and photos, improving privacy and content management. When it is enabled, users receive a notification to approve or reject each tag before it appears, which gives them more control over how their personal information is shared. The tool helps users maintain a curated online presence and avoid unwanted associations on their profiles.
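To make the approve-or-reject flow concrete, here is a minimal sketch that models Tag Review as a small state machine: every tag starts as pending and becomes visible only after the post owner approves it. This is a hypothetical model for illustration only. `Tag`, `TagStatus`, and `TagReviewQueue` are invented names, not part of any Facebook API, and the real feature may behave differently.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class TagStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Tag:
    tagger: str       # person who added the tag
    tagged_user: str  # person being tagged
    post_id: str
    status: TagStatus = TagStatus.PENDING


@dataclass
class TagReviewQueue:
    """Hypothetical model of tags that others add to one owner's posts."""

    owner: str
    review_enabled: bool = True
    tags: list[Tag] = field(default_factory=list)

    def add_tag(self, tag: Tag) -> Tag:
        # With review turned off, tags take effect immediately.
        if not self.review_enabled:
            tag.status = TagStatus.APPROVED
        self.tags.append(tag)
        return tag

    def approve(self, tag: Tag) -> None:
        self._decide(tag, TagStatus.APPROVED)

    def reject(self, tag: Tag) -> None:
        self._decide(tag, TagStatus.REJECTED)

    def _decide(self, tag: Tag, status: TagStatus) -> None:
        # Each pending tag can be decided exactly once.
        if tag.status is not TagStatus.PENDING:
            raise ValueError("tag has already been reviewed")
        tag.status = status

    def pending(self) -> list[Tag]:
        return [t for t in self.tags if t.status is TagStatus.PENDING]

    def visible_tags(self) -> list[Tag]:
        # Only approved tags are shown; rejected tags stay hidden.
        return [t for t in self.tags if t.status is TagStatus.APPROVED]
```

In this model, rejecting a tag only hides the tag; the post itself is unaffected, and turning `review_enabled` off makes new tags visible immediately.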

What is Content Moderation on Facebook?

Content moderation on Facebook involves reviewing and managing user-generated posts, comments, images, and videos to ensure they comply with the platform's Community Standards. Facebook uses a combination of artificial intelligence algorithms and human moderators to detect and remove harmful content such as hate speech, misinformation, violence, and spam. This process helps maintain a safe and respectful environment for more than 3 billion monthly active users worldwide.
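The combination of automated detection and human review is often explained as a confidence-based triage step. The sketch below assumes a classifier that outputs a violation score between 0 and 1 for each post; the `Post` class, the `triage` function, and both threshold values are hypothetical and do not describe Facebook's actual system.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    violation_score: float  # hypothetical classifier confidence in [0, 1]


# Hypothetical thresholds; a real system would tune these per policy area.
AUTO_REMOVE_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


def triage(post: Post) -> str:
    """Route a post based on an automated classifier's score."""
    if not 0.0 <= post.violation_score <= 1.0:
        raise ValueError("violation_score must be in [0, 1]")
    if post.violation_score >= AUTO_REMOVE_THRESHOLD:
        return "remove"        # high confidence: act automatically
    if post.violation_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"  # uncertain: send to a human moderator
    return "allow"             # low score: leave the post up
```

Lowering `HUMAN_REVIEW_THRESHOLD` sends more borderline posts to human moderators at the cost of a larger review workload; the two thresholds encode that trade-off.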

Tag Review vs. Content Moderation: Key Differences

Tag review is a user-level control: it lets you decide whether posts and photos you are tagged in are linked to your profile, and it affects only your association with that content. Content moderation is a platform-level process: it evaluates posts, comments, and images for compliance with the Community Standards and removes harmful or inappropriate material for everyone. Understanding these differences helps you manage both your own online presence and your interactions on the platform.

The Importance of Tag Review for User Privacy

Tag review is important for user privacy because it lets individuals control which photos and posts they are associated with, preventing unwanted exposure. Facebook and Instagram both offer tag review settings that let users approve tags before they appear on their profiles, reducing privacy risks and improving personal content management. Enabling tag review protects users from unwanted tagging and reduces the chance that sensitive information, such as where they were or who they were with, is linked to them without their consent.

Content Moderation: Ensuring Community Standards

Content moderation on social media platforms enforces community standards by filtering harmful, offensive, and misleading content to maintain a safe and respectful environment. AI algorithms combined with human reviewers assess posts, comments, and multimedia to detect violations such as hate speech, misinformation, and harassment. Effective content moderation builds user trust and engagement and helps platforms comply with laws governing online speech and platform responsibility.

Pros and Cons of Facebook's Tag Review System

Facebook's tag review system gives users more control by letting them approve or reject tags before they appear, improving privacy and reducing unwanted exposure. Because tags are screened before they go live, the system helps prevent embarrassment and identity misuse. The cost is friction: tags stay pending until the user acts, so a delayed or missed notification can leave legitimate tags unapproved and reduce the visibility of shared content. Overall, the feature trades some convenience in social engagement for stronger privacy.

Challenges Faced in Content Moderation

Content moderation on social media faces the challenge of identifying harmful or misleading content while respecting free speech and privacy rights. Automated systems often struggle with context and cultural nuance, which leads to inconsistent enforcement and potential bias. By reporting inappropriate content, you help platforms maintain safer online communities despite these complexities.
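A toy example shows why context is hard for automated systems. The hypothetical `naive_flag` function below flags any text containing a blocklisted word, with no understanding of meaning. The blocklist is invented for illustration and is far simpler than the classifiers platforms actually use.

```python
import re

# Hypothetical blocklist for illustration only.
BLOCKLIST = {"kill", "attack"}


def naive_flag(text: str) -> bool:
    """Flag text if any word matches the blocklist, ignoring context."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in BLOCKLIST for word in words)
```

`naive_flag("I'm going to kill it at my presentation tomorrow")` returns `True` even though the sentence is harmless, while a veiled threat such as `naive_flag("Watch your back tonight")` returns `False`. Both errors come from matching words instead of interpreting context.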

How Tag Review and Content Moderation Work Together

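Tag review and content moderation complement each other at different levels. Tag review gives individual users control over whether they are associated with a post, while content moderation evaluates the post itself against the Community Standards. Removing a tag detaches your name from a post but does not remove the post; if the content itself is harmful, reporting it sends it into the moderation process. Within that process, automated systems label content based on its characteristics (these internal labels are distinct from the people tags handled by Tag Review), enabling targeted moderation that quickly surfaces harmful or inappropriate material. Human moderators then review the labeled content and apply nuanced judgment, reducing false positives and maintaining a positive user experience.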
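The routing step can be sketched as a priority queue in which automatically assigned labels decide the order in which human moderators see reported content. The label names, the `SEVERITY` ranking, and the `ReviewQueue` class are all hypothetical; the example only illustrates severity-based prioritization, not how Facebook actually orders its review queues.

```python
from __future__ import annotations

import heapq
import itertools

# Hypothetical severity ranking for internal content labels
# (lower number = reviewed sooner). Labels are illustrative only.
SEVERITY = {"violence": 0, "hate_speech": 1, "misinformation": 2, "spam": 3}


class ReviewQueue:
    """Orders labeled reports so human moderators see the most severe first."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        # Tie-breaker keeps reports of equal severity in submission order.
        self._counter = itertools.count()

    def submit(self, post_id: str, labels: list[str]) -> None:
        known = [SEVERITY[label] for label in labels if label in SEVERITY]
        if not known:
            return  # no recognized label: not routed (a simplification)
        # A post with several labels is ranked by its most severe one.
        heapq.heappush(self._heap, (min(known), next(self._counter), post_id))

    def next_for_review(self) -> str | None:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)
```

Using a running counter as the second tuple element follows the usual `heapq` pattern for stable priority queues: reports with the same severity come out in the order they were submitted.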

Future Trends in Facebook Tag Review and Moderation

Future developments in Facebook's tag review and moderation are likely to rely more heavily on advanced AI to identify and filter inappropriate or misleading content. Machine learning models that analyze user interactions and context can classify content more precisely and reduce the need for manual moderation. You can expect more automation combined with real-time monitoring, aimed at creating safer and more transparent social media environments.

socmedb.com
