Photo illustration: Facebook Content Removal vs Content Demotion

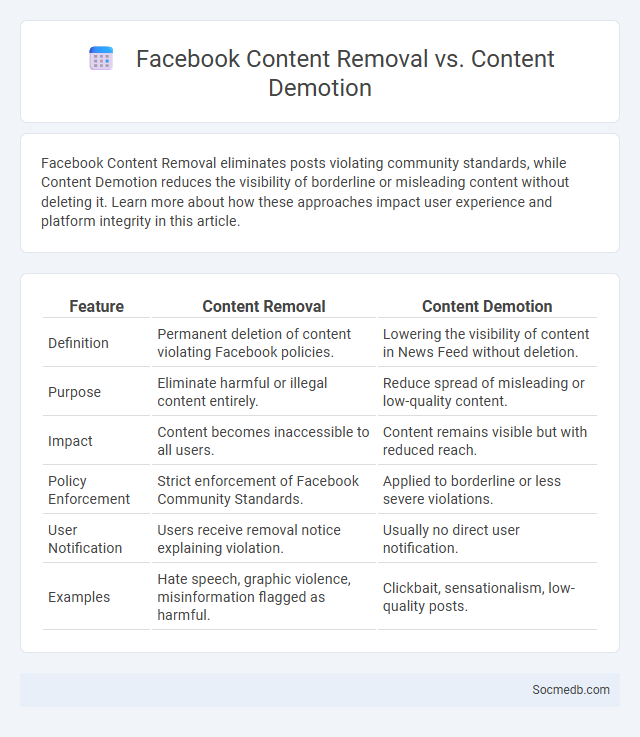

Facebook Content Removal eliminates posts violating community standards, while Content Demotion reduces the visibility of borderline or misleading content without deleting it. Learn more about how these approaches impact user experience and platform integrity in this article.

Table of Comparison

| Feature | Content Removal | Content Demotion |
|---|---|---|
| Definition | Permanent deletion of content violating Facebook policies. | Lowering the visibility of content in News Feed without deletion. |
| Purpose | Eliminate harmful or illegal content entirely. | Reduce spread of misleading or low-quality content. |
| Impact | Content becomes inaccessible to all users. | Content remains visible but with reduced reach. |
| Policy Enforcement | Strict enforcement of Facebook Community Standards. | Applied to borderline or less severe violations. |
| User Notification | Users receive removal notice explaining violation. | Usually no direct user notification. |
| Examples | Hate speech, graphic violence, misinformation flagged as harmful. | Clickbait, sensationalism, low-quality posts. |

Understanding Facebook Content Policies

Facebook content policies prioritize user safety by regulating hate speech, misinformation, and graphic content to create a respectful environment. These guidelines emphasize transparency, requiring clear labeling of political ads and restrictions on deceptive practices. Understanding Facebook's community standards helps users and businesses align their posts with platform rules, reducing the risk of content removal or account penalties.

What is Content Removal on Facebook?

Content Removal on Facebook refers to the process of deleting posts, comments, photos, or videos that violate community standards, or taking them down in response to a valid user request. You can request content removal if it infringes on your rights or contains harmful, misleading, or inappropriate material. Facebook's automated systems and human moderators work together to review and remove such content to maintain a safe online environment.

Exploring Facebook’s Content Demotion Practices

Facebook employs content demotion practices to reduce the visibility of posts that come close to violating community standards or that spread misinformation, without deleting them. Algorithms assess factors such as user reports, fact-checkers' input, and engagement patterns to identify content warranting demotion. These measures aim to promote credible information while limiting harmful or deceptive material on the platform.
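As a rough illustration of how signals like these could feed into demotion, here is a minimal sketch in Python. It is not Facebook's actual ranking system: the `Post` fields, the `demotion_multiplier` function, and every weight and threshold are hypothetical values chosen only to show the idea that a demoted post stays in the feed but is ranked lower.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    base_score: float                 # hypothetical relevance score before any demotion
    user_reports: int = 0             # number of user reports (hypothetical signal)
    fact_check_rating: str = "none"   # "none", "partly_false", or "false" (hypothetical labels)
    engagement_bait: bool = False     # e.g. "share if you agree" style posts


def demotion_multiplier(post: Post) -> float:
    """Return a factor in (0, 1]; lower values mean stronger demotion.

    All weights below are illustrative assumptions, not real platform values.
    """
    multiplier = 1.0
    if post.fact_check_rating == "false":
        multiplier *= 0.2
    elif post.fact_check_rating == "partly_false":
        multiplier *= 0.5
    if post.engagement_bait:
        multiplier *= 0.6
    if post.user_reports >= 10:
        multiplier *= 0.8
    return multiplier


def rank_feed(posts: list[Post]) -> list[Post]:
    """Sort posts by demoted score. Nothing is deleted, only reordered."""
    return sorted(
        posts,
        key=lambda p: p.base_score * demotion_multiplier(p),
        reverse=True,
    )


if __name__ == "__main__":
    feed = [
        Post("a", base_score=0.9, fact_check_rating="false"),
        Post("b", base_score=0.5),
    ]
    print([p.post_id for p in rank_feed(feed)])  # → ['b', 'a']
```

In this sketch, post `a` would normally rank first, but its fact-check rating pushes it below post `b`. Both posts remain in the result, which is the difference between demotion and removal.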

Defining Content Moderation on Facebook

Content moderation on Facebook involves the systematic review and management of user-generated posts, comments, and media to ensure compliance with community standards and legal regulations. This process utilizes advanced algorithms combined with human oversight to identify and remove harmful, misleading, or inappropriate content efficiently. Your experience on the platform is safeguarded by these measures, promoting a safer and more trustworthy online environment.

Key Differences: Removal vs Demotion vs Moderation

Removal involves completely deleting content from a platform due to violations of community guidelines or legal requirements, effectively making it inaccessible to all users. Demotion reduces the visibility or reach of specific posts without deleting them, often using algorithms to limit exposure based on criteria like misinformation or low-quality content. Moderation encompasses both proactive and reactive processes, including content review, flagging, and user reporting to enforce platform rules, balancing freedom of expression with safety and compliance.
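The distinction can be summarized as a small decision rule. The sketch below is only a simplified model of the comparison in this article: the `Action` enum, the `choose_action` function, and its two boolean inputs are hypothetical names, and real moderation decisions depend on far more context than two flags.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"   # content is deleted and inaccessible to all users
    DEMOTE = "demote"   # content stays up but reaches fewer people
    NONE = "none"       # no enforcement action


def choose_action(violates_standards: bool, is_borderline: bool) -> Action:
    """Map a moderation review outcome to an enforcement action.

    Simplified assumption: clear violations are removed, borderline or
    low-quality content is demoted, and everything else is left alone.
    """
    if violates_standards:
        return Action.REMOVE
    if is_borderline:
        return Action.DEMOTE
    return Action.NONE


if __name__ == "__main__":
    print(choose_action(violates_standards=True, is_borderline=False))   # → Action.REMOVE
    print(choose_action(violates_standards=False, is_borderline=True))   # → Action.DEMOTE
```

Moderation, in this framing, is the review step that produces the two inputs; removal and demotion are the possible outcomes.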

Reasons for Facebook Content Removal

Facebook removes content primarily to enforce its Community Standards, which prohibit hate speech, violence, misinformation, and harassment to maintain a safe user environment. Content promoting illegal activities, graphic violence, or adult nudity is also subject to removal to comply with legal requirements and protect vulnerable audiences. The platform uses a combination of artificial intelligence and user reports to identify and swiftly take down violating posts, ensuring compliance with global regulations and user policies.

When Does Facebook Choose Content Demotion?

Facebook chooses content demotion when posts approach, but do not clearly cross, the line set by its community standards, promote misinformation, or contain low-quality and spammy material. The platform also reduces the reach of content that generates user complaints or exhibits engagement bait tactics. Demotion algorithms prioritize authentic, relevant content to enhance user experience and reduce the spread of harmful or misleading information.

The Role of Human Moderators and AI

Human moderators play a crucial role in maintaining the integrity of social media platforms by contextualizing content and making nuanced decisions that AI algorithms may overlook. AI enhances moderation efficiency through automated detection of harmful content such as hate speech, misinformation, and spam by analyzing vast datasets with machine learning models. Combining human judgment with AI's speed and scalability optimizes content review processes, ensuring safer and more reliable online communities.
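One common way to combine the two is confidence-based triage: an automated classifier acts alone only when it is very sure, and uncertain cases go to people. The following Python sketch illustrates that pattern under stated assumptions. The `route` function and both threshold values are hypothetical, and nothing here describes how any specific platform configures its systems.

```python
def route(
    classifier_score: float,
    auto_threshold: float = 0.95,    # hypothetical: above this, act automatically
    review_threshold: float = 0.60,  # hypothetical: above this, ask a human
) -> str:
    """Decide who handles a post based on a model's violation score in [0, 1]."""
    if not 0.0 <= classifier_score <= 1.0:
        raise ValueError("classifier_score must be between 0 and 1")
    if classifier_score >= auto_threshold:
        return "auto_action"     # model is confident enough to act alone
    if classifier_score >= review_threshold:
        return "human_review"    # ambiguous case: needs human context
    return "no_action"           # unlikely to be a violation


if __name__ == "__main__":
    for score in (0.99, 0.75, 0.10):
        print(score, route(score))
    # → 0.99 auto_action
    # → 0.75 human_review
    # → 0.1 no_action
```

Lowering `auto_threshold` would shift more work to automation at the cost of more mistaken actions, while lowering `review_threshold` would send more posts to human moderators.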

Impacts of Content Actions on Users and Creators

Content actions on social media, such as likes, shares, and comments, significantly influence user engagement and creator visibility through algorithmic prioritization. Positive interactions boost creators' reach and revenue opportunities by enhancing content discoverability across platforms like Instagram, TikTok, and YouTube. Negative feedback or content removal can lead to decreased user trust and reduced monetization potential, impacting overall community dynamics and platform sustainability.

How to Appeal Facebook’s Content Decisions

To appeal Facebook's content decisions effectively, you need to thoroughly review the Community Standards violation notification and gather evidence supporting your case. Access the "Support Inbox" on your Facebook account, locate the specific decision, and use the provided appeal button to submit a clear, concise explanation addressing the alleged violation. Your appeal should highlight any misunderstandings or context that demonstrates compliance with Facebook's policies to increase the likelihood of a successful reversal.

socmedb.com
