Photo illustration: Facebook Manual Review vs Automated Review

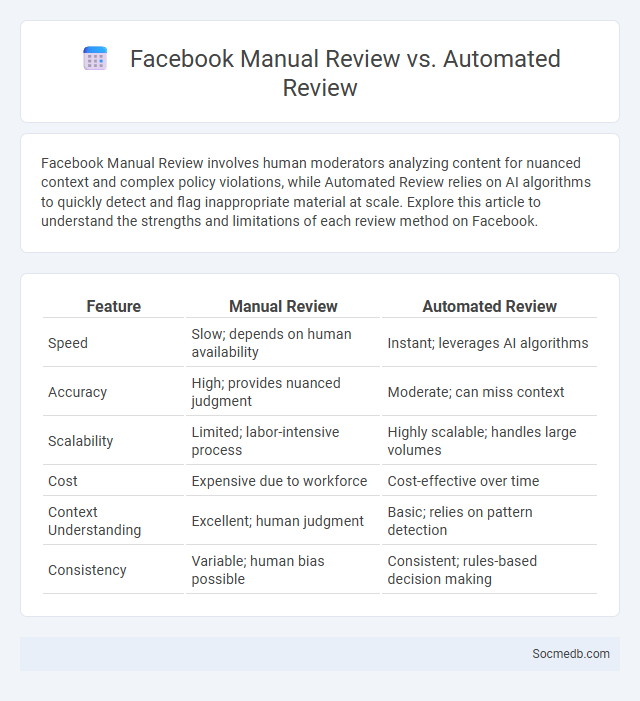

Facebook Manual Review involves human moderators analyzing content for nuanced context and complex policy violations, while Automated Review relies on AI algorithms to quickly detect and flag inappropriate material at scale. Explore this article to understand the strengths and limitations of each review method on Facebook.

Table of Comparison

| Feature | Manual Review | Automated Review |
|---|---|---|
| Speed | Slow; depends on human availability | Instant; leverages AI algorithms |
| Accuracy | High; provides nuanced judgment | Moderate; can miss context |
| Scalability | Limited; labor-intensive process | Highly scalable; handles large volumes |
| Cost | Expensive due to workforce | Cost-effective over time |
| Context Understanding | Excellent; human judgment | Basic; relies on pattern detection |
| Consistency | Variable; human bias possible | Consistent; rules-based decision making |

Introduction to Facebook Review Systems

Facebook uses "review" in two senses. Its business review feature lets users share their experiences and rate or recommend businesses, which adds transparency and builds trust; that feedback helps businesses improve their services based on real customer insights and can strengthen engagement and brand credibility. The review systems compared in this article are different: they are the manual and automated processes Facebook uses to check content against its policies.

What is Manual Review on Facebook?

Manual review on Facebook involves human moderators evaluating reported content to ensure it complies with community standards and policies. This process helps identify false positives or nuanced violations that automated systems may miss, enhancing the accuracy of content moderation. Human reviewers analyze context, intent, and specific details before deciding to remove, restrict, or leave the content live.

Understanding Automated Review Processes

Automated review processes on social media utilize artificial intelligence algorithms to analyze content for compliance with community guidelines and policies. These systems prioritize detecting hate speech, misinformation, and explicit material by scanning text, images, and videos at scale. Continuous advancements in machine learning enhance accuracy, reduce false positives, and enable faster decision-making in content moderation.
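To make the idea of pattern-based scanning concrete, here is a minimal sketch in Python. It is not Facebook's implementation: production systems rely on trained machine learning models, and the categories, patterns, and the `scan_text` function below are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical patterns, for illustration only. Real systems use trained
# classifiers rather than a short hand-written list like this one.
BLOCKED_PATTERNS = {
    "spam": re.compile(r"\b(free money|click here)\b", re.IGNORECASE),
    "harassment": re.compile(r"\byou are (an idiot|worthless)\b", re.IGNORECASE),
}


@dataclass
class Flag:
    category: str      # which hypothetical policy area matched
    matched_text: str  # the exact text that triggered the flag


def scan_text(text: str) -> List[Flag]:
    """Return one flag per category whose pattern appears in the text."""
    flags = []
    for category, pattern in BLOCKED_PATTERNS.items():
        match = pattern.search(text)
        if match:
            flags.append(Flag(category, match.group(0)))
    return flags


if __name__ == "__main__":
    print(scan_text("Click HERE for free money"))
    print(scan_text("Nice photo!"))
    # Quoting a phrase in order to report it is flagged too, because the
    # scanner matches patterns without understanding context.
    print(scan_text("I reported a comment that said click here"))
```

The last call shows the limitation noted in the comparison table: a pattern match cannot tell a spam post from a user describing one, which is where human review comes in.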

Content Moderation: The Broader Framework

Content moderation on social media platforms involves a comprehensive framework that includes automated algorithms, human reviewers, and user reporting mechanisms to detect and manage harmful or inappropriate content. Effective moderation safeguards community standards, prevents misinformation, and ensures a safe online environment by balancing freedom of expression with regulatory compliance. By understanding this framework, you can better navigate platform policies and contribute to a healthier digital space.

Key Differences Between Manual and Automated Review

Manual reviews on social media rely on human judgment, offering nuanced understanding and contextual evaluation of user-generated content, while automated reviews use AI algorithms to quickly analyze large volumes of data based on pre-set criteria. You benefit from manual reviews when accuracy and sensitivity to cultural or linguistic subtleties are crucial; automated reviews excel in speed and consistency, helping to handle real-time moderation of comments, posts, and messages. Combining both methods enhances content quality control, ensuring safety and compliance with platform policies while optimizing operational efficiency.
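One common way to combine the two methods is to let an automated score decide only the clear-cut cases and send everything in between to a person. The sketch below assumes a classifier that outputs a violation score between 0 and 1; the `route` function and both threshold values are hypothetical and are not taken from Facebook's systems.

```python
from enum import Enum


class Decision(Enum):
    REMOVE = "remove"              # automated action, high confidence
    HUMAN_REVIEW = "human_review"  # uncertain, needs human judgment
    ALLOW = "allow"                # automated pass, low risk


def route(violation_score: float,
          remove_above: float = 0.95,
          allow_below: float = 0.20) -> Decision:
    """Route content based on an assumed classifier score in [0, 1].

    The thresholds are illustrative defaults, not real platform values.
    """
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= remove_above:
        return Decision.REMOVE
    if violation_score < allow_below:
        return Decision.ALLOW
    return Decision.HUMAN_REVIEW


if __name__ == "__main__":
    for score in (0.99, 0.50, 0.05):
        print(score, route(score).value)
```

Widening the gap between the two thresholds sends more content to human reviewers, which raises cost but lowers the chance of an automated mistake; narrowing it does the opposite.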

Advantages of Manual Review

Manual review of social media content is more accurate on context-specific cases, reducing both the false positives (acceptable content removed by mistake) and the false negatives (violations left up) that automated systems often produce. It improves compliance with platform policies because human judgment can interpret sensitive or ambiguous content more effectively. Manual review also helps identify emerging trends and malicious behavior, improving the overall quality and safety of the social media environment.
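False positives and false negatives can be summarized with two standard metrics, precision and recall. The short sketch below shows the arithmetic; the function name and the counts in the example are made up for illustration and do not describe any real moderation data.

```python
from typing import Tuple


def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> Tuple[float, float]:
    """Compute precision and recall from moderation outcome counts.

    precision: of everything flagged, the share that really violated policy.
    recall:    of everything that violated policy, the share that was flagged.
    """
    flagged = true_pos + false_pos
    violating = true_pos + false_neg
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / violating if violating else 0.0
    return precision, recall


if __name__ == "__main__":
    # Made-up counts: 80 correct flags, 20 wrongful flags, 20 missed violations.
    precision, recall = precision_recall(80, 20, 20)
    print(f"precision={precision:.2f} recall={recall:.2f}")
```

Fewer false positives raise precision, and fewer false negatives raise recall, so comparing these two numbers before and after human review is one way to quantify what reviewers add.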

Advantages of Automated Review

Automated review systems on social media improve efficiency by analyzing large volumes of content quickly, which means faster moderation and a better user experience. Platforms benefit from consistent enforcement of community guidelines, with less of the variability and human error that come with case-by-case evaluation. These tools also enable real-time feedback and scalable monitoring, supporting safer and more engaging online environments.

Challenges in Facebook Content Moderation

Facebook content moderation faces challenges due to the sheer volume of daily posts, requiring advanced AI algorithms alongside human reviewers to identify harmful content effectively. The platform struggles to balance free expression against preventing misinformation, hate speech, and graphic material, which often leads to inconsistent enforcement and user dissatisfaction. To protect your online experience, Facebook continuously updates its policies and invests in technologies, yet the dynamic nature of content and cultural differences complicate moderation efforts.
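When reports outnumber reviewers, one plausible way to cope with volume is to order the human review queue so the riskiest items are seen first. The sketch below is an assumption for illustration, not a description of Facebook's queueing: the priority formula (severity multiplied by view count) and the function names are hypothetical.

```python
import heapq
from typing import List, Tuple

Report = Tuple[str, float, int]  # (content_id, severity in [0, 1], view count)


def build_queue(reports: List[Report]) -> List[Tuple[float, str]]:
    """Build a max-priority queue using a hypothetical severity * views score."""
    heap: List[Tuple[float, str]] = []
    for content_id, severity, views in reports:
        priority = severity * views
        # heapq is a min-heap, so store the negated priority.
        heapq.heappush(heap, (-priority, content_id))
    return heap


def next_for_review(heap: List[Tuple[float, str]]) -> str:
    """Pop the content ID with the highest remaining priority."""
    _, content_id = heapq.heappop(heap)
    return content_id


if __name__ == "__main__":
    queue = build_queue([
        ("post-a", 0.2, 1000),  # low severity, moderate reach
        ("post-b", 0.9, 5000),  # high severity, wide reach
        ("post-c", 0.9, 10),    # high severity, tiny reach
    ])
    while queue:
        print(next_for_review(queue))
```

A real system would weigh many more signals, but the sketch shows why limited reviewer time forces an explicit choice about which content gets human attention first.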

Choosing the Right Review Method

Choosing the right review method for social media content ensures accurate sentiment analysis and engagement measurement. You should prioritize methods like user-generated reviews or expert evaluations depending on your platform's goals and audience behavior. Optimizing review strategies affects brand reputation and drives informed marketing decisions.

Future Trends in Facebook Content Moderation

Facebook content moderation is evolving with advancements in artificial intelligence and machine learning, enabling more accurate detection of harmful content. Your experience will benefit from enhanced real-time filtering of misinformation, hate speech, and graphic material across the platform. Future trends also emphasize transparency and user empowerment through improved reporting tools and clearer moderation policies.

socmedb.com
