Photo illustration: TikTok censorship vs YouTube censorship

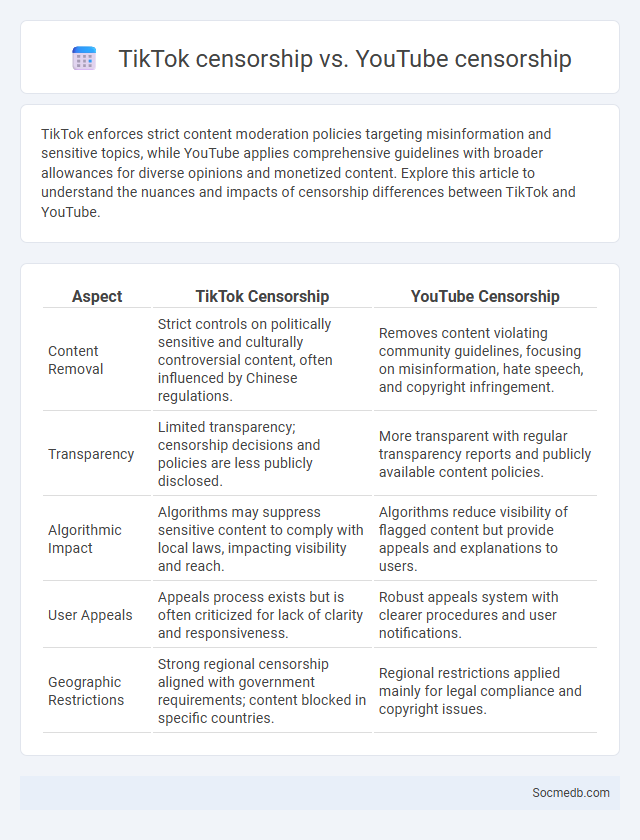

TikTok enforces strict content moderation policies targeting misinformation and sensitive topics, while YouTube applies comprehensive guidelines with broader allowances for diverse opinions and monetized content. Explore this article to understand the nuances and impacts of censorship differences between TikTok and YouTube.

Table of Comparison

| Aspect | TikTok Censorship | YouTube Censorship |
|---|---|---|
| Content Removal | Strict controls on politically sensitive and culturally controversial content, often influenced by Chinese regulations. | Removes content violating community guidelines, focusing on misinformation, hate speech, and copyright infringement. |
| Transparency | Limited transparency; censorship decisions and policies are less publicly disclosed. | More transparent with regular transparency reports and publicly available content policies. |
| Algorithmic Impact | Algorithms may suppress sensitive content to comply with local laws, impacting visibility and reach. | Algorithms reduce visibility of flagged content but provide appeals and explanations to users. |
| User Appeals | Appeals process exists but is often criticized for lack of clarity and responsiveness. | Robust appeals system with clearer procedures and user notifications. |
| Geographic Restrictions | Strong regional censorship aligned with government requirements; content blocked in specific countries. | Regional restrictions applied mainly for legal compliance and copyright issues. |

Understanding Platform Policies: TikTok vs YouTube

Understanding platform policies is crucial for effective content creation and compliance on TikTok and YouTube. TikTok emphasizes short-form, dynamic video content with strict community guidelines focusing on copyright and user safety, while YouTube supports longer videos with detailed monetization rules and copyright policies enforced through Content ID. Both platforms require creators to stay updated on policy changes to avoid account penalties and maximize audience engagement.

Definitions of Censorship Across Social Media

Social media censorship is the restriction or removal of content deemed inappropriate, harmful, or against platform policies, and its scope varies widely across platforms such as Facebook, Twitter, and Instagram. Definitions of censorship on social media often encompass content moderation practices that balance free expression with the prevention of misinformation, hate speech, and illegal activities. Understanding these definitions helps you navigate platform rules and protect your digital presence.

What Constitutes Banned Content?

Banned content on social media includes material that violates platform policies, such as hate speech, harassment, explicit violence, and illegal activities. Posts containing misinformation, graphic content, or promotion of self-harm may also be removed to protect users and enforce community standards. Understanding these restrictions helps you create responsible content while avoiding penalties or account suspension.

Content Moderation Algorithms: How They Work

Content moderation algorithms analyze user-generated posts by leveraging machine learning models trained on vast datasets to identify and flag inappropriate or harmful content such as hate speech, spam, and violence. These algorithms employ natural language processing (NLP) and image recognition techniques to assess textual and visual data in real time, ensuring compliance with platform policies. Continuous updates and human oversight enhance their accuracy by adapting to emerging trends and minimizing false positives.
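The flag-then-review flow described above can be sketched in a few lines. This is a minimal illustration, not how TikTok or YouTube actually implement moderation: the term weights stand in for a trained model's output, and the names `TERM_WEIGHTS`, `score_text`, `moderate`, and both thresholds are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical term weights standing in for the signal a trained
# classifier would produce; real systems use ML models, not word lists.
TERM_WEIGHTS = {"spam": 0.4, "scam": 0.5, "attack": 0.6}


@dataclass
class Decision:
    score: float
    action: str  # "allow", "human_review", or "flag"


def score_text(text: str) -> float:
    """Sum the weights of known terms, capped at 1.0."""
    words = (w.strip(".,!?") for w in text.lower().split())
    return min(sum(TERM_WEIGHTS.get(w, 0.0) for w in words), 1.0)


def moderate(text: str, review_at: float = 0.4, flag_at: float = 0.8) -> Decision:
    """Route a post: high scores are flagged, mid scores go to a human."""
    score = score_text(text)
    if score >= flag_at:
        action = "flag"
    elif score >= review_at:
        action = "human_review"
    else:
        action = "allow"
    return Decision(score, action)
```

Sending mid-range scores to `human_review` rather than flagging them automatically mirrors the human oversight step mentioned above, which is one way to limit false positives.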

Case Studies: Viral Censorship Incidents

Case studies of viral censorship incidents reveal how social media platforms handle content moderation during high-profile controversies, and how those decisions affect public discourse and user trust. Notable examples include the suppression of content about political protests and the handling of misinformation during pandemics and elections, each highlighting the tension between free expression and platform policies. Studying these incidents helps you understand how such actions can affect your social media presence and how to respond to them.

Impact of Regional Laws on Content Bans

Regional laws significantly shape social media content policies by dictating what material can be published, restricted, or removed within specific geographic areas. You must navigate varying regulations, such as the European Union's Digital Services Act or China's Cybersecurity Law, each enforcing different standards for content censorship and platform responsibility. These legal frameworks influence how social media platforms moderate user-generated content, affecting freedom of expression, data privacy, and the availability of information across regions.
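A region-based restriction can be thought of as a lookup from a piece of content to the places where it is withheld. The sketch below assumes that model; the content ID, the placeholder region codes, and the names `BLOCKED_REGIONS` and `is_available` are all hypothetical and do not reflect any platform's real data or API.

```python
# Hypothetical mapping from a content ID to the region codes where the
# content is withheld. "XX" and "YY" are placeholders, not real regions.
BLOCKED_REGIONS = {
    "video-123": {"XX", "YY"},
}


def is_available(content_id: str, region: str) -> bool:
    """Return True unless the content is withheld in the viewer's region."""
    blocked = BLOCKED_REGIONS.get(content_id, set())
    return region.upper() not in blocked
```

Content with no entry in the mapping is treated as available everywhere, so a restriction only applies where a rule has been recorded for it.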

Freedom of Expression: Differences Between Platforms

Freedom of expression varies significantly across social media platforms due to differing content policies and community guidelines. Platforms like Twitter prioritize real-time public discourse but enforce rules against hate speech and misinformation, while Facebook employs complex algorithms and moderation to balance openness with safety. Understanding these distinctions helps you navigate each platform and express yourself effectively within its rules.

User Appeals and Content Restoration Processes

User appeals in social media platforms enable individuals to challenge content removal or account suspensions, ensuring fairness and transparency in enforcement. Content restoration processes typically involve a systematic review by moderators or automated systems to verify whether the original decision was accurate, balancing community guidelines with user rights. Effective appeal mechanisms enhance trust, promote user engagement, and help maintain platform integrity by correcting erroneous content restrictions.
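The appeal and restoration flow can be modelled as a small state machine. This is a sketch under assumed states and transitions, not a description of any platform's actual process; `Status`, `ALLOWED`, `advance`, and `resolve_appeal` are hypothetical names.

```python
from enum import Enum


class Status(Enum):
    REMOVED = "removed"
    UNDER_REVIEW = "under_review"
    RESTORED = "restored"
    UPHELD = "upheld"


# Assumed transitions: an appeal moves removed content into review,
# and review ends in either restoration or an upheld removal.
ALLOWED = {
    Status.REMOVED: {Status.UNDER_REVIEW},
    Status.UNDER_REVIEW: {Status.RESTORED, Status.UPHELD},
    Status.RESTORED: set(),
    Status.UPHELD: set(),
}


def advance(current: Status, new: Status) -> Status:
    """Move to a new status, rejecting transitions the flow does not allow."""
    if new not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {new.value}")
    return new


def resolve_appeal(violation_confirmed: bool) -> Status:
    """Run one appeal: review the removal, then restore or uphold it."""
    status = advance(Status.REMOVED, Status.UNDER_REVIEW)
    outcome = Status.UPHELD if violation_confirmed else Status.RESTORED
    return advance(status, outcome)
```

Making the allowed transitions explicit means a decision cannot skip the review step, which is the property that lets an appeal correct an erroneous removal.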

The Role of Misinformation and Political Content

Misinformation on social media platforms significantly influences public opinion by spreading false or misleading political content rapidly among users. Algorithms designed to maximize engagement often amplify polarizing political posts, intensifying echo chambers and reducing exposure to balanced viewpoints. This dynamic undermines democratic processes by distorting information accuracy and shaping voter behavior based on deceptive narratives.

Looking Ahead: The Future of Social Platform Censorship

Emerging technologies and evolving regulatory frameworks are shaping the future of social platform censorship, emphasizing transparency and accountability. Artificial intelligence-driven content moderation systems will enhance accuracy but raise ethical concerns over free speech and bias. Increased collaboration between governments, platforms, and users is critical to balancing safety, privacy, and expression in digital spaces.

socmedb.com
