Photo illustration: Community Notes vs Moderation

Community Notes empowers users to collaboratively add context and reduce misinformation on social media platforms, enhancing transparency and trust. Explore this article to understand how Community Notes compares to traditional moderation methods in improving online discourse.

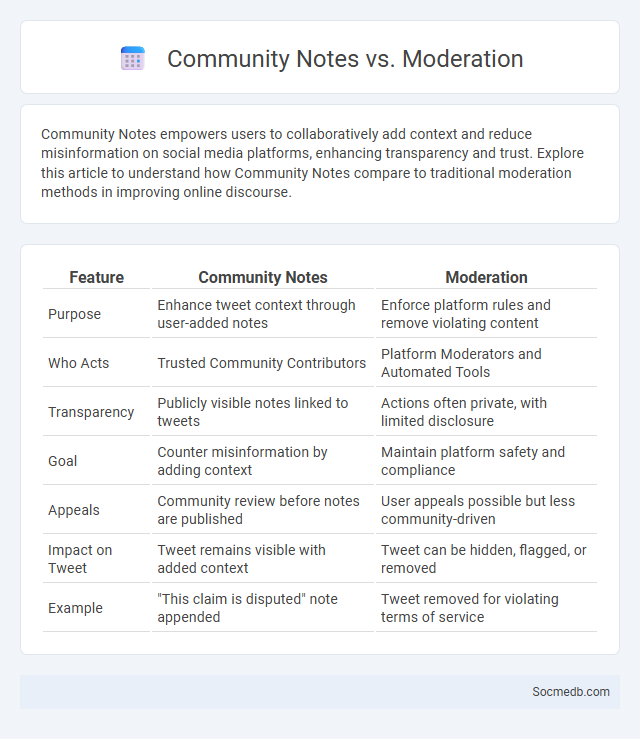

Table of Comparison

| Feature | Community Notes | Moderation |
|---|---|---|
| Purpose | Enhance tweet context through user-added notes | Enforce platform rules and remove violating content |
| Who Acts | Trusted Community Contributors | Platform Moderators and Automated Tools |
| Transparency | Publicly visible notes linked to tweets | Actions often private, with limited disclosure |
| Goal | Counter misinformation by adding context | Maintain platform safety and compliance |
| Appeals | Community review before notes are published | User appeals possible but less community-driven |
| Impact on Tweet | Tweet remains visible with added context | Tweet can be hidden, flagged, or removed |
| Example | "This claim is disputed" note appended | Tweet removed for violating terms of service |

Introduction to Community Notes and Moderation

Community Notes empowers users to collaboratively fact-check and add context to social media posts, enhancing content reliability and reducing misinformation. Moderation tools enable you to manage and curate interactions, maintaining a respectful and engaging online environment. Utilizing these features promotes transparency and accountability within social networks, fostering healthier digital communities.

Defining Community Notes: Purpose and Function

Community Notes serves as a collaborative fact-checking tool on social media platforms, designed to address misinformation by allowing users to add context and corrections to posts. The system improves content credibility by drawing on diverse perspectives, and its crowd-sourced moderation helps limit the spread of false information. By promoting transparent dialogue, Community Notes helps users make informed decisions and improves overall platform trustworthiness.
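The idea of community review drawing on diverse perspectives can be illustrated with a small sketch. It is a simplified model and not any platform's actual scoring algorithm: the `Rating` and `Note` classes, the `rater_group` label, and the `min_groups` threshold are all hypothetical names chosen for illustration. The assumption is that a note is shown only once raters from at least two different viewpoint groups have marked it helpful.

```python
from dataclasses import dataclass, field


@dataclass
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool


@dataclass
class Note:
    text: str
    ratings: list[Rating] = field(default_factory=list)

    def is_published(self, min_groups: int = 2) -> bool:
        """Return True once raters from at least `min_groups`
        distinct groups have marked the note helpful (assumed rule)."""
        helpful_groups = {r.rater_group for r in self.ratings if r.helpful}
        return len(helpful_groups) >= min_groups


note = Note("The quoted statistic is from an older report.")
note.ratings.append(Rating("A", True))
one_group = note.is_published()   # only group A agrees so far

note.ratings.append(Rating("B", True))
two_groups = note.is_published()  # groups A and B both agree
```

Under this assumed rule, agreement within a single group is not enough to publish a note, which is one way to model review that depends on more than one perspective.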

Understanding Moderation: Roles and Responsibilities

Social media moderation involves monitoring content to enforce community guidelines, ensuring platforms remain safe and respectful for all users. Moderators evaluate posts, comments, and multimedia to identify harmful or inappropriate material, balancing free expression with the need to prevent harassment, misinformation, and hate speech. This role requires a deep understanding of platform policies, cultural sensitivities, and legal regulations to maintain digital trust and user engagement.

Key Differences: Community Notes vs Moderation

Community Notes empowers users to add context and correct misinformation collaboratively, enhancing transparency and collective accountability in social media platforms. Moderation involves platform-appointed moderators or algorithms enforcing rules by removing harmful or inappropriate content to maintain safe and respectful online environments. While Community Notes fosters user-driven fact-checking through public annotations, moderation emphasizes regulatory actions to control and filter content based on community guidelines.
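The contrast above can be sketched as a small data model. This is an illustrative sketch only: the `Post` class, the `Visibility` values, and the two helper functions are hypothetical names, not part of any platform's API. It shows the one distinction the comparison rests on, namely that a note adds context while leaving the post visible, whereas a moderation action changes the post's visibility.

```python
from dataclasses import dataclass, field
from enum import Enum


class Visibility(Enum):
    VISIBLE = "visible"
    HIDDEN = "hidden"
    REMOVED = "removed"


@dataclass
class Post:
    text: str
    visibility: Visibility = Visibility.VISIBLE
    notes: list[str] = field(default_factory=list)


def add_community_note(post: Post, note: str) -> None:
    """Attach public context; the post itself stays visible."""
    post.notes.append(note)


def moderate(post: Post, action: Visibility) -> None:
    """Apply an enforcement action that changes the post's visibility."""
    post.visibility = action


annotated = Post("A widely shared claim")
add_community_note(annotated, "This claim is disputed")

removed = Post("A post that violates the terms of service")
moderate(removed, Visibility.REMOVED)
```

After running this, `annotated` is still visible and carries one note, while `removed` has no notes and is no longer shown.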

Advantages of Community Notes in Social Platforms

Community Notes enhances social platforms by attaching community-reviewed fact-checks directly to posts, reducing misinformation through collaborative user input. Because the notes are publicly visible, they increase transparency and trust and help you judge the credibility of a post quickly. By drawing on diverse perspectives, Community Notes fosters informed discussion and a safer online environment.

Challenges and Limitations of Moderation

Social media moderation faces significant challenges in balancing free expression with the removal of harmful content such as misinformation, hate speech, and harassment. Algorithmic bias and the sheer scale of user-generated content make it difficult to identify and address violations accurately without suppressing legitimate speech. Ensuring transparency, cultural sensitivity, and timely responses remains a complex task for platforms striving to maintain safe and inclusive online communities.

How Community Notes Enhance Moderation

Community Notes enhance social media moderation by leveraging collective user insights to identify and correct misinformation rapidly and accurately. This collaborative fact-checking system improves content credibility and transparency, reducing the spread of false information across platforms. By empowering diverse voices in content review, Community Notes promote a more trustworthy and balanced digital environment.

Case Studies: Community Notes and Moderation in Action

Community Notes exemplify effective social media moderation by leveraging user contributions to identify and correct misinformation swiftly. Case studies reveal that this collaborative approach increases transparency and trust, reducing the spread of false content across platforms. Analysis shows that community-driven moderation enhances engagement while maintaining content accuracy in dynamic online environments.

Impact on User Trust and Platform Integrity

Privacy breaches and misinformation on social media platforms significantly erode user trust, leading to decreased engagement and platform loyalty. The proliferation of fake accounts and manipulated content undermines platform integrity, forcing companies to implement stringent verification and content moderation policies. Transparency in data handling and proactive measures against harmful behavior are essential to restore confidence and maintain a trustworthy digital environment.

Future Trends: The Evolution of Community-Led Moderation

Community-led moderation in social media is evolving through AI-powered tools that enhance real-time content filtering and foster user trust. Emerging decentralized platforms leverage blockchain to increase transparency and empower users with control over moderation policies. These innovations are expected to reduce misinformation, promote healthier online interactions, and create more resilient digital communities.

socmedb.com
