Photo illustration: YouTube Demonetization vs Deplatforming

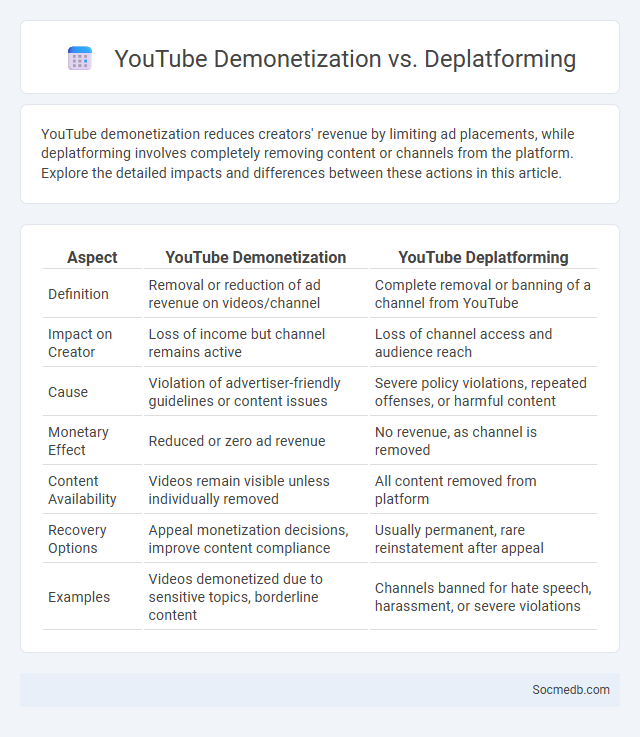

YouTube demonetization reduces creators' revenue by limiting ad placements, while deplatforming involves completely removing content or channels from the platform. Explore the detailed impacts and differences between these actions in this article.

Table of Comparison

| Aspect | YouTube Demonetization | YouTube Deplatforming |
|---|---|---|
| Definition | Removal or reduction of ad revenue on videos/channel | Complete removal or banning of a channel from YouTube |
| Impact on Creator | Loss of income but channel remains active | Loss of channel access and audience reach |
| Cause | Violation of advertiser-friendly guidelines or content issues | Severe policy violations, repeated offenses, or harmful content |
| Monetary Effect | Reduced or zero ad revenue | No revenue, as channel is removed |
| Content Availability | Videos remain visible unless individually removed | All content removed from platform |
| Recovery Options | Appeal monetization decisions, improve content compliance | Usually permanent, rare reinstatement after appeal |
| Examples | Videos demonetized due to sensitive topics, borderline content | Channels banned for hate speech, harassment, or severe violations |

Understanding YouTube Demonetization: What It Means for Creators

YouTube demonetization means that videos or an entire channel are deemed ineligible for ad revenue, or limited to reduced ads, because they violate YouTube's monetization policies or advertiser-friendly guidelines. With fewer or no ads running on the affected content, creators' income drops, which often prompts them to diversify into revenue sources such as sponsorships or merchandise sales. Knowing the specific causes, such as copyright infringement, community guideline strikes, or controversial content, helps creators keep their videos monetized.

Deplatforming Explained: Removal from YouTube and Its Consequences

Deplatforming on YouTube involves the removal of a user's account or content due to policy violations, often related to hate speech, misinformation, or copyright infringement. The consequences of such removal include loss of audience reach, revenue streams, and digital influence, significantly impacting creators' ability to engage and monetize. This enforcement mechanism serves to maintain platform integrity but can also provoke debates over censorship and free speech boundaries.

The Growing Issue of Demonization on Social Platforms

Demonization on social media platforms has intensified, with users frequently subjected to harmful stereotypes and unjust character attacks. Recommendation algorithms often amplify divisive content, which increases the visibility of demonizing posts and contributes to a toxic online environment. Major platforms such as Facebook, Twitter, and Instagram face mounting pressure to adopt stricter policies against hate speech and to promote constructive dialogue.

Key Differences: Demonetization vs Deplatforming vs Demonization

Demonetization refers to the removal or reduction of a user's ability to earn revenue on social media platforms, often by disabling ads or restricting monetization features. Deplatforming involves the complete removal or suspension of a user's account, effectively banning them from the platform to prevent further content posting or interaction. Demonization entails portraying an individual or group negatively through content or discourse, aiming to damage their reputation without necessarily restricting their platform access or monetization capabilities.

Common Reasons YouTube Channels Face Demonetization

YouTube channels are most often demonetized for content that violates the community guidelines or advertiser-friendly content policies, including inappropriate content, hate speech, and copyright infringement. Audience metrics matter as well: joining and staying in the YouTube Partner Program depends on meeting subscriber and watch-time thresholds, and YouTube may turn off monetization for channels that stay inactive for an extended period. Upload cadence and click-through rate mainly affect reach and earnings rather than eligibility itself. Channels that fail to comply with these policies face reduced revenue and possible suspension from the YouTube Partner Program.

Case Studies: High-Profile Instances of Deplatforming

High-profile deplatforming cases show how such decisions affect public discourse and user behavior. The best-known example is the permanent suspension of former U.S. President Donald Trump's Twitter account in January 2021 following the Capitol riot. These cases illustrate how companies like Facebook, Twitter, and YouTube enforce their policies on hate speech, misinformation, and incitement to violence. The repercussions extend to debates on free speech, censorship, and the responsibility of social media giants in regulating global communication.

The Impact of Demonization on Creator Reputation and Reach

Demonization on social media platforms can significantly harm a creator's reputation by fostering negative perceptions and reducing audience trust. It often leads to lower content visibility and engagement, which directly limits the creator's reach and growth potential. Understanding platform moderation policies and actively managing community interactions are key strategies for mitigating reputational risk and sustaining long-term influence.

YouTube’s Policies: How They Trigger Each Action

YouTube's policies trigger different actions depending on which rules are broken and how severely. Content that conflicts with the advertiser-friendly guidelines typically receives limited or no ads, while violations such as hate speech, misinformation, and copyright infringement can lead to age restrictions, content removal, or account suspension. Understanding the community guidelines helps you stay compliant, avoid penalties, and maintain channel integrity. Enforcement relies on automated systems combined with human review to uphold platform safety and advertiser trust.

Strategies for Creators to Avoid Demonetization and Deplatforming

Creators should follow platform guidelines by producing original, community-friendly content and avoiding violations such as hate speech, misinformation, and copyright infringement. Engaging consistently with the audience, for example by responding to comments and using platform-specific features, helps maintain visibility in recommendations. Monitoring analytics and promptly addressing any strikes or warnings helps sustain monetization and a long-term presence across YouTube, TikTok, and Instagram.

The Future of Content Moderation: Trends and Predictions

Emerging technologies like artificial intelligence and machine learning are transforming content moderation on social media by enabling faster and potentially more accurate detection of harmful or inappropriate content. Platforms are increasingly adopting proactive measures, such as automated filtering combined with human oversight, to balance user safety and freedom of expression. If these trends continue, users can expect smarter moderation policies and less exposure to offensive or misleading information.

socmedb.com
