Photo illustration: YouTube Flagging vs Manual Review

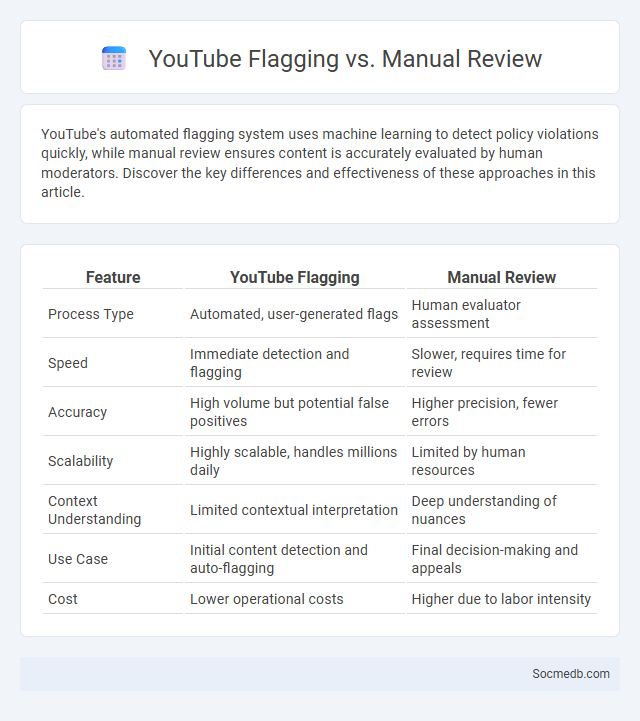

YouTube flags potential policy violations in two ways: automated systems that use machine learning to detect violations quickly, and reports submitted by users. Manual review then has human moderators evaluate the flagged content for accuracy. This article compares the two approaches and how effective each one is.

Table of Comparison

| Feature | YouTube Flagging | Manual Review |
|---|---|---|
| Process Type | Automated, user-generated flags | Human evaluator assessment |
| Speed | Immediate detection and flagging | Slower, requires time for review |
| Accuracy | High volume but potential false positives | Higher precision, fewer errors |
| Scalability | Highly scalable, handles millions daily | Limited by human resources |
| Context Understanding | Limited contextual interpretation | Deep understanding of nuances |
| Use Case | Initial content detection and auto-flagging | Final decision-making and appeals |
| Cost | Lower operational costs | Higher due to labor intensity |

Understanding YouTube’s Content Moderation System

YouTube's content moderation system employs advanced algorithms and human reviewers to identify and remove harmful or inappropriate videos. Understanding how automated detection, community guidelines, and appeals processes work helps you navigate content policies effectively. Staying informed about updates in YouTube's moderation practices ensures your channel remains compliant and visible.

What is YouTube Flagging?

YouTube flagging is the process where users report videos that violate community guidelines, such as inappropriate content, spam, or copyright infringement. This system helps maintain content quality and ensures compliance with YouTube policies by alerting moderators for review. You can protect your channel by understanding flagging criteria and addressing any flagged content promptly to avoid penalties.

How Does Manual Review Work on YouTube?

Manual review on YouTube involves human moderators who assess flagged content to ensure it complies with community guidelines, focusing on context, intent, and nuances that automated systems may miss. Reviewers evaluate videos for violations such as hate speech, misinformation, or inappropriate content, determining whether to remove, restrict, or leave the video accessible. This process enhances content accuracy and fairness, supporting YouTube's efforts to maintain a safe and trustworthy platform.

Key Differences: Flagging vs. Manual Review

Flagging on YouTube relies on users identifying and reporting inappropriate content, which triggers automated systems for an initial assessment. Manual review involves trained moderators who evaluate flagged content for context and policy compliance, allowing nuanced decisions that go beyond algorithmic detection. When you encounter a questionable video, understanding these two processes shows how community involvement and professional oversight work together to keep the platform safe.

Pros and Cons of Automated Flagging

Automated flagging on platforms such as YouTube enables rapid identification and removal of inappropriate or harmful content, improving online safety and compliance with community standards. However, the technology can produce false positives, which censor legitimate speech and frustrate users. Balancing accuracy against sensitivity remains a critical challenge: the system must preserve freedom of expression while keeping the digital environment respectful.
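The trade-off can be made concrete with a small sketch. It assumes a classifier that assigns each video a violation score between 0 and 1 and flags anything at or above a threshold. The scores, labels, and the `error_counts` helper are invented for illustration and do not reflect YouTube's actual system or data.

```python
from typing import List, Tuple

# Hypothetical sample: (classifier score, whether the video truly violates policy).
# These values are made up purely to illustrate the trade-off.
SAMPLES: List[Tuple[float, bool]] = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True),
    (0.60, False), (0.40, True), (0.30, False), (0.10, False),
]


def error_counts(samples: List[Tuple[float, bool]], threshold: float) -> Tuple[int, int]:
    """Return (false_positives, missed_violations) for a flagging threshold."""
    false_positives = sum(
        1 for score, violates in samples if score >= threshold and not violates
    )
    missed_violations = sum(
        1 for score, violates in samples if score < threshold and violates
    )
    return false_positives, missed_violations


if __name__ == "__main__":
    for threshold in (0.5, 0.85):
        fp, missed = error_counts(SAMPLES, threshold)
        print(f"threshold={threshold}: false positives={fp}, missed violations={missed}")
```

On this toy data, the lower threshold wrongly flags two legitimate videos and misses one violation, while the higher threshold flags no legitimate videos but misses two violations. Neither setting removes both kinds of error.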

Advantages and Limitations of Manual Review

Manual review identifies nuanced context, hate speech, and misinformation more accurately than automated systems. Its limitations include poor scalability, slower processing times, and potential reviewer biases that affect consistency. Balancing human judgment with technology is essential to maintaining content quality and user safety.

Community Involvement in Flagging Content

Community involvement in flagging content plays a crucial role in maintaining the quality and safety of social media platforms. By empowering users to report inappropriate or harmful posts, these platforms rely on collective vigilance to quickly identify and remove content that violates their guidelines. Taking part in this process helps keep your online environment secure and respectful for all participants.

Impact of Flagging and Manual Review on Creators

Flagging and manual review processes significantly influence content creators by shaping visibility and audience engagement on social media platforms. The removal or restriction of flagged content can lead to reduced reach, impacting creators' revenue and brand partnerships. Accurate and transparent review mechanisms are essential to maintaining creator trust and fostering a fair digital ecosystem.

YouTube’s Policy Enforcement: Automation vs. Human Oversight

YouTube's policy enforcement relies on a hybrid approach where automated systems detect and flag potentially harmful content, while human moderators review flagged materials to ensure accuracy and context sensitivity. This balance helps maintain platform safety by quickly addressing violations without compromising fair judgment. Your content can be protected or moderated more effectively when both AI algorithms and human oversight work together to uphold community guidelines.
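A hybrid flow of this kind can be sketched as a simple routing function. This is a minimal illustration, not YouTube's implementation: the `Flag` fields, the two thresholds, and the queue names are all hypothetical, and it assumes an automated classifier that produces a violation score between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen for illustration; not YouTube's actual values.
PRIORITY_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.50


@dataclass
class Flag:
    video_id: str
    violation_score: float  # assumed classifier confidence in [0, 1]
    user_reports: int = 0   # number of user reports received


def route(flag: Flag) -> str:
    """Decide which queue a flagged video enters in this toy pipeline.

    Automation only triages; the final decision stays with a human reviewer.
    """
    if flag.violation_score >= PRIORITY_THRESHOLD:
        return "priority_review"
    if flag.violation_score >= REVIEW_THRESHOLD or flag.user_reports > 0:
        return "standard_review"
    return "no_action"


if __name__ == "__main__":
    print(route(Flag("vid-1", 0.97)))
    print(route(Flag("vid-2", 0.20, user_reports=3)))
    print(route(Flag("vid-3", 0.10)))
```

In this sketch a user report always reaches a human reviewer even when the automated score is low, which reflects the idea that community flags and algorithmic detection feed the same review step.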

Future Trends in YouTube Content Moderation

YouTube content moderation is increasingly leveraging artificial intelligence and machine learning algorithms to detect harmful content with higher accuracy and speed. Emerging trends include the integration of real-time video analysis and natural language processing to better understand context and reduce false positives. User empowerment through enhanced reporting tools and transparency reports is also shaping the future landscape of platform safety and community trust.

socmedb.com
