Photo illustration: Facebook User Reports vs Moderator Decisions

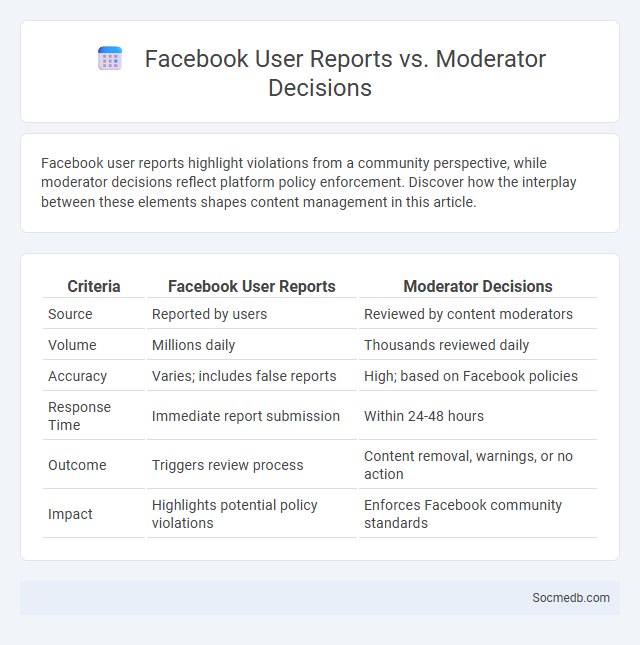

Facebook user reports highlight violations from a community perspective, while moderator decisions reflect platform policy enforcement. Discover how the interplay between these elements shapes content management in this article.

Table of Comparison

| Criteria | Facebook User Reports | Moderator Decisions |
|---|---|---|
| Source | Reported by users | Reviewed by content moderators |
| Volume | Millions daily | Thousands reviewed daily |
| Accuracy | Varies; includes false reports | High; based on Facebook policies |
| Response Time | Immediate report submission | Within 24-48 hours |
| Outcome | Triggers review process | Content removal, warnings, or no action |
| Impact | Highlights potential policy violations | Enforces Facebook community standards |

Introduction to Facebook Content Moderation

Facebook content moderation combines automated detection with a global network of human reviewers to identify and remove harmful or inappropriate material. The process prioritizes the enforcement of community standards and aims to protect users by filtering hate speech, misinformation, and graphic content. Moderation policies are updated continuously in response to emerging threats and changes in how the platform is used.

Understanding Facebook User Reports

Facebook user reports play a crucial role in maintaining platform safety by allowing users to flag content that violates community standards, such as hate speech, harassment, or misinformation. Analyzing these reports helps Facebook prioritize content review and enforce policies effectively, reducing harmful interactions and improving user experience. Patterns in user reports also enable the platform to enhance automated detection algorithms and update its content moderation strategies.

The Role of Moderators in Facebook

Moderators on Facebook play a crucial role in maintaining community standards by reviewing user-generated content and enforcing platform policies to prevent misinformation, hate speech, and harmful behavior. Your experience on Facebook depends heavily on their effectiveness in creating a safe environment that encourages respectful interactions and content relevance. By ensuring compliance with Facebook's guidelines, moderators help protect users from spam, scams, and other malicious activities that degrade the quality of social media engagement.

User Reports vs Moderator Decisions: Key Differences

User reports flag content that users perceive as inappropriate or in violation of community guidelines, and they serve as the initial trigger for moderation. Moderator decisions follow a review based on platform policies, context, and severity, with the aim of consistent enforcement. The key difference lies in the source and nature of the evaluation: user reports reflect subjective concerns, while moderator decisions apply the platform's written policy criteria.

Types of Content Flagged on Facebook

Facebook primarily flags hate speech, misinformation, and violent or graphic material to maintain community standards. Posts containing harassment or spam are also frequently identified and removed to protect users from harmful interactions. Content promoting terrorism, nudity, or self-harm is likewise strictly monitored to protect the platform's safety and integrity.

Accuracy and Efficiency of User Reporting

User reporting is only useful when reports are accurate and easy to act on. Accurate reports let algorithms and moderators prioritize genuine violations, which reduces time spent on false reports and speeds up intervention. Your prompt and precise reporting helps maintain a safer and more reliable online community by streamlining the moderation process.
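To make the idea of prioritizing genuine violations concrete, here is a minimal Python sketch of one possible approach: weighting each report by how reliable the reporter has been in the past. This is an illustration only, not a description of Facebook's actual system. The `Report` class, the `reporter_accuracy` field, and the `priority_scores` function are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class Report:
    content_id: str
    # Hypothetical signal: share of this reporter's past reports
    # that moderators upheld, from 0.0 to 1.0.
    reporter_accuracy: float


def priority_scores(reports):
    """Sum reporter accuracy per content item; highest totals come first."""
    scores = {}
    for report in reports:
        scores[report.content_id] = (
            scores.get(report.content_id, 0.0) + report.reporter_accuracy
        )
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


reports = [
    Report("post_a", 0.9),
    Report("post_b", 0.2),
    Report("post_b", 0.1),
    Report("post_a", 0.8),
    Report("post_c", 0.5),
]

# Review queue ordered from highest to lowest weighted score.
queue = priority_scores(reports)
for content_id, score in queue:
    print(content_id, round(score, 2))
```

In this sketch, two reports from historically unreliable reporters (`post_b`) rank below a single report from a moderately reliable one (`post_c`), which is one way false reports could be kept from crowding the review queue.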

Challenges Faced by Facebook Moderators

Facebook moderators encounter significant challenges such as exposure to graphic content that can impact mental health and cause trauma. They often face the difficulty of maintaining consistent enforcement of community standards across diverse cultural contexts and rapidly evolving online behaviors. The pressure to balance thorough content review with the demands for quick decision-making leads to high stress and burnout rates among moderators.

Appeals and Disputes in Content Moderation

Appeals and dispute processes in content moderation are designed to balance user rights against the enforcement of community standards. Appeals mechanisms let users challenge content removal, which supports transparency and accountability and helps correct wrongful removals. Disputes often arise from subjective interpretations of policies on hate speech, misinformation, and harassment, so both automated systems and human reviewers are needed to reach fair outcomes.

Impact of Moderation on User Experience

Effective social media moderation directly influences user experience by fostering safer, more respectful online communities and reducing exposure to harmful content. By implementing clear guidelines and employing advanced moderation tools, platforms can enhance user trust and encourage positive interactions. Your engagement increases when the digital environment feels supportive and free from spam, abuse, or misinformation.

Future of Content Moderation on Facebook

The future of content moderation on Facebook is expected to rely more heavily on AI and machine learning to detect harmful content with higher accuracy and speed. Real-time monitoring and proactive removal of misinformation, hate speech, and violent material are intended to improve your online safety. Facebook's evolving moderation tools aim to balance user expression with community standards to foster a safer digital environment.

socmedb.com