Photo illustration: YouTube spam bot vs spam filter

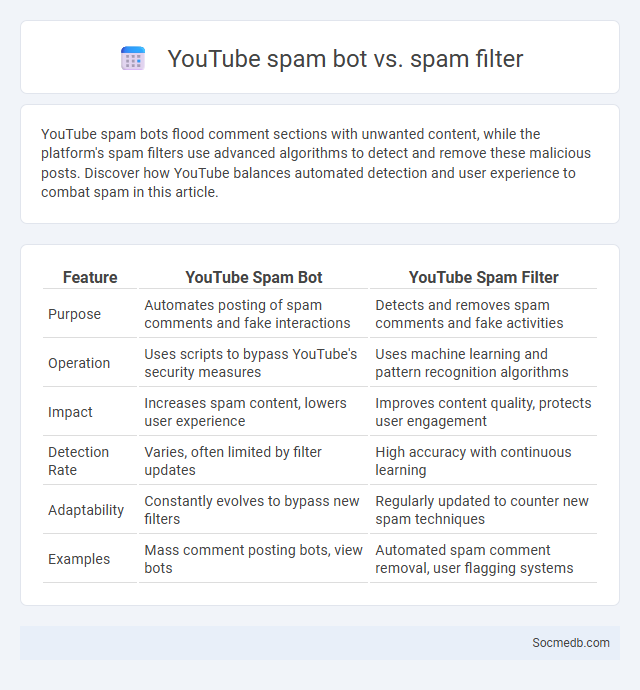

YouTube spam bots flood comment sections with unwanted content, while the platform's spam filters use advanced algorithms to detect and remove these malicious posts. Discover how YouTube balances automated detection and user experience to combat spam in this article.

Table of Comparison

| Feature | YouTube Spam Bot | YouTube Spam Filter |
|---|---|---|
| Purpose | Automates posting of spam comments and fake interactions | Detects and removes spam comments and fake activities |
| Operation | Uses scripts to bypass YouTube's security measures | Uses machine learning and pattern recognition algorithms |
| Impact | Increases spam content, lowers user experience | Improves content quality, protects user engagement |
| Detection Rate | Varies, often limited by filter updates | High accuracy with continuous learning |
| Adaptability | Constantly evolves to bypass new filters | Regularly updated to counter new spam techniques |
| Examples | Mass comment posting bots, view bots | Automated spam comment removal, user flagging systems |

Understanding YouTube Spam Bots

YouTube spam bots flood video comments and messages with irrelevant links and promotional content, undermining genuine user engagement. These automated programs mimic human behavior, which makes them difficult to detect while they spread misleading or harmful material. Protecting your channel requires applying comment filters and reporting suspicious activity to maintain a safe and authentic community experience.

How Spam Filters Work on YouTube

Spam filters on YouTube analyze video comments, descriptions, and user interactions to identify and block content that contains malicious links, repetitive messages, or promotional spam. These filters use machine learning algorithms trained on vast datasets to detect patterns commonly associated with spam, ensuring a safer and more engaging community environment. Understanding how spam filters work can help you create legitimate content and avoid automatic flagging or removal of your videos.
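The signals named above (malicious links, repetitive messages, promotional spam) can be illustrated with a small rule-based check. This is only a sketch for intuition: YouTube's actual filters are machine-learned and not public, and the names `spam_signals`, `PROMO_TERMS`, and `repeat_limit` are hypothetical choices made for this example.

```python
import re

# Hypothetical signal definitions; a production filter would learn these from data.
URL_PATTERN = re.compile(r"(https?://|www\.)\S+", re.IGNORECASE)
PROMO_TERMS = {"free", "giveaway", "click", "win", "promo"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations compare equal."""
    return " ".join(text.lower().split())


def spam_signals(comment: str, recent_comments: list[str], repeat_limit: int = 2) -> list[str]:
    """Return the names of the spam signals that a comment triggers."""
    signals = []
    text = normalize(comment)

    # Signal 1: the comment contains something that looks like a link.
    if URL_PATTERN.search(text):
        signals.append("link")

    # Signal 2: the comment uses at least one promotional keyword.
    if set(re.findall(r"[a-z]+", text)) & PROMO_TERMS:
        signals.append("promo")

    # Signal 3: the same text already appeared several times recently.
    repeats = sum(1 for earlier in recent_comments if normalize(earlier) == text)
    if repeats >= repeat_limit:
        signals.append("repeat")

    return signals
```

A comment that triggers no signals returns an empty list. A learned model would weigh many more features than these three, but the input (comment text plus recent history) has the same shape.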

Key Differences Between Spam Bots and Spam Filters

Spam bots generate unsolicited messages and fake accounts, overwhelming users with irrelevant content and potentially compromising account security. Spam filters work in the opposite direction: they analyze incoming comments and user activity with machine learning algorithms to detect and block spam before it reaches viewers. Understanding these key differences helps you manage and secure your channel against unwanted disruptions.

Common Tactics Used by YouTube Spam Bots

YouTube spam bots commonly use tactics such as automated commenting, mass liking, and rapid subscription to channels in order to manipulate engagement metrics and promote external links or malicious content. These bots often deploy repetitive keywords and irrelevant hashtags to increase video visibility and disrupt genuine user interactions. Detecting these activities requires analyzing patterns like unnatural comment frequency, generic account profiles, and coordinated behavior across multiple accounts.
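One of the patterns mentioned above, unnatural comment frequency, can be sketched as a sliding-window counter per account. The function name `flag_bursty_accounts` and the thresholds (more than 5 comments in 60 seconds) are assumptions chosen for illustration, not values YouTube is known to use.

```python
from collections import defaultdict, deque


def flag_bursty_accounts(events, max_comments=5, window_seconds=60):
    """Flag accounts that post more than max_comments within window_seconds.

    events is an iterable of (account_id, timestamp_in_seconds) pairs.
    """
    recent = defaultdict(deque)  # account_id -> timestamps inside the window
    flagged = set()

    for account_id, timestamp in sorted(events, key=lambda event: event[1]):
        window = recent[account_id]
        window.append(timestamp)

        # Drop timestamps that have fallen out of the sliding window.
        while timestamp - window[0] > window_seconds:
            window.popleft()

        if len(window) > max_comments:
            flagged.add(account_id)

    return flagged


if __name__ == "__main__":
    events = [("bot", t) for t in range(0, 20, 2)] + [("human", 0), ("human", 300)]
    print(flag_bursty_accounts(events))
```

Frequency alone produces false positives (an active moderator can also post quickly), so in practice it would be combined with the other patterns listed above, such as generic profiles and coordinated behavior across accounts.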

The Role of Automated Spam Detection

Automated spam detection uses machine learning algorithms and natural language processing techniques to identify and filter out harmful, irrelevant, or malicious content on social media platforms such as Facebook, Twitter, and Instagram. This technology improves user experience by reducing the prevalence of spammy messages, phishing attempts, and fake accounts, thereby enhancing platform security and content quality. Advanced systems analyze patterns like posting frequency, message similarity, and user behavior to proactively block or flag suspicious activity in real time.
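Message similarity, one of the patterns listed above, can be approximated with Jaccard similarity over word sets. This is a minimal sketch under the assumption that near-identical wording indicates copy-pasted spam; the helper names and the 0.8 threshold are hypothetical, and real systems typically use more robust text representations.

```python
def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two messages."""
    tokens_a = set(a.lower().split())
    tokens_b = set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0  # two empty messages are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def near_duplicate_pairs(messages: list[str], threshold: float = 0.8) -> list[tuple[int, int]]:
    """Return index pairs (i, j), i < j, whose similarity meets the threshold."""
    pairs = []
    for i in range(len(messages)):
        for j in range(i + 1, len(messages)):
            if jaccard(messages[i], messages[j]) >= threshold:
                pairs.append((i, j))
    return pairs


if __name__ == "__main__":
    comments = [
        "Check out my channel for free gifts",
        "check out my channel for FREE gifts",
        "Loved the editing in this video",
    ]
    print(near_duplicate_pairs(comments))
```

The pairwise comparison is quadratic in the number of messages, which is fine for a single comment thread but would need hashing or indexing techniques at platform scale.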

Challenges in Blocking YouTube Spam Bots

YouTube spam bots exploit platform algorithms to generate fake views, comments, and subscriber counts, undermining genuine engagement and content credibility. Effective blocking requires advanced machine learning models that can differentiate between human behavior and automated activity in real time. However, bot tactics evolve rapidly and the volume of traffic is enormous, which makes it difficult for content moderators and automated systems to maintain consistent detection accuracy.

How Users Can Report and Avoid Spam Bots

Users can report spam bots on social media platforms through the report feature, typically found in the settings or menu of suspicious profiles or messages. Avoiding spam bots involves not clicking on suspicious links, not sharing personal information, and regularly updating privacy settings to filter out automated accounts. Social media companies combine algorithms with user reports to identify and remove spam bots, enhancing overall platform security and user experience.

Recent Updates to YouTube’s Spam Defense

YouTube's recent updates to its spam defense include enhanced machine learning algorithms designed to detect and remove spam comments more efficiently, improving content quality for creators and viewers. These improvements target repetitive and misleading messages, reducing the visibility of spammy content across the platform. By strengthening spam filters, YouTube helps protect your channel's engagement and ensures a safer community experience.

The Impact of Spam Bots on YouTube Communities

Spam bots on YouTube disrupt authentic community engagement by flooding comment sections with irrelevant or malicious content, leading to decreased user trust and interaction. These automated accounts can manipulate video metrics, skewing algorithmic recommendations and harming content creators' visibility and revenue. Addressing spam bot activity is crucial for maintaining a healthy digital environment and ensuring genuine audience connection within YouTube communities.

Future Trends in YouTube Spam Prevention

Future trends in YouTube spam prevention will heavily rely on advanced machine learning algorithms that detect and filter out spam comments, fake accounts, and misleading content with greater accuracy. Enhanced AI-powered moderation tools will enable real-time identification of suspicious behavior patterns, protecting creators and viewers from spammy interactions. You can expect ongoing improvements in community guidelines enforcement, leveraging user feedback and automated systems to maintain a safer, more authentic user experience.

socmedb.com
