Photo illustration: Facebook Moderator vs Content Moderator

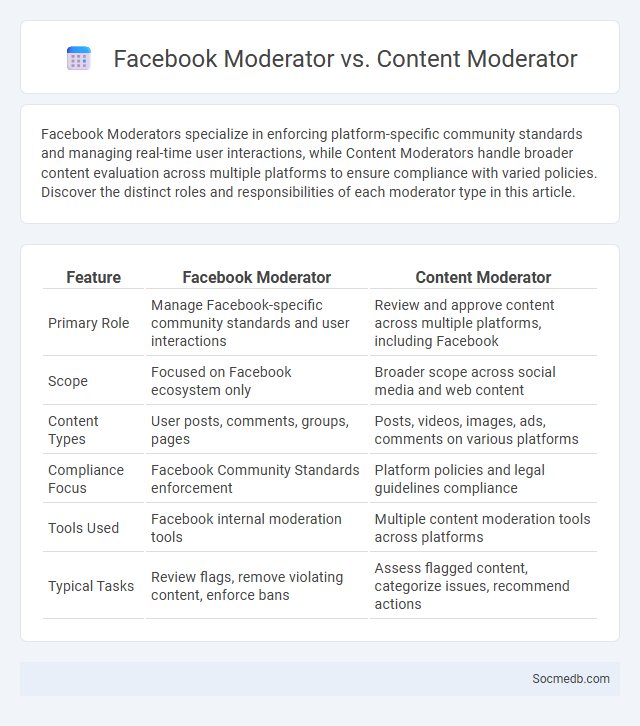

Facebook Moderators specialize in enforcing platform-specific community standards and managing real-time user interactions, while Content Moderators handle broader content evaluation across multiple platforms to ensure compliance with varied policies. Discover the distinct roles and responsibilities of each moderator type in this article.

Table of Comparison

| Feature | Facebook Moderator | Content Moderator |
|---|---|---|
| Primary Role | Enforce Facebook's Community Standards and manage user interactions | Review and approve content across multiple platforms, including Facebook |
| Scope | Facebook ecosystem only | Broader scope across social media and web content |
| Content Types | User posts, comments, groups, pages | Posts, videos, images, ads, and comments on various platforms |
| Compliance Focus | Enforcement of Facebook's Community Standards | Compliance with platform policies and legal guidelines |
| Tools Used | Facebook's internal moderation tools | Multiple content moderation tools across platforms |
| Typical Tasks | Review flags, remove violating content, enforce bans | Assess flagged content, categorize issues, recommend actions |

Understanding the Role of a Moderator

A social media moderator plays a crucial role in managing online communities by monitoring content to ensure compliance with platform policies and community guidelines. They filter out harmful or inappropriate material, address user disputes, and foster a positive, engaging environment that aligns with brand values. Effective moderation enhances user experience, reduces the spread of misinformation, and protects the platform's reputation.

What is a Facebook Moderator?

A Facebook Moderator manages online communities by monitoring posts, comments, and interactions to ensure compliance with group rules and Facebook's community standards. They identify and remove harmful content such as spam, hate speech, or misinformation to maintain a positive environment for members. Your role as a Facebook Moderator is essential in fostering engagement and protecting the integrity of the group.

Who is a Content Moderator?

A Content Moderator is a trained professional responsible for reviewing and managing user-generated content on social media platforms to ensure compliance with community standards and legal regulations. They identify and remove harmful, inappropriate, or misleading posts, protecting your online experience from violations such as hate speech, violence, and misinformation. Their role is crucial in maintaining a safe and trustworthy digital environment for all users.

Defining a General Moderator

A general moderator on social media oversees community interactions, ensuring content aligns with platform guidelines and community standards. Your role involves identifying inappropriate posts, managing user behavior, and fostering a positive online environment. Effective moderation enhances user experience and maintains platform integrity.

Key Responsibilities: Facebook Moderator vs Content Moderator vs Moderator

Facebook Moderators primarily enforce Facebook's Community Standards, reviewing posts, comments, and profiles and removing harmful or inappropriate content to keep users safe and the platform compliant. Content Moderators handle a broader range of digital content across various platforms, filtering offensive, misleading, or irrelevant material according to each platform's guidelines and cultural context. As a general Moderator, your role involves overseeing online interactions to keep communication respectful, enforce rules, and protect the community from violations, combining elements of both roles depending on the platform's needs.

Skills Required for Each Moderator Role

Effective social media moderation demands distinct skills tailored to each role, such as strong communication and conflict resolution abilities for community moderators managing user interactions. Content moderators require sharp attention to detail and familiarity with platform policies to identify and remove inappropriate or harmful material quickly. You must also possess technical proficiency for using moderation tools and analytical skills to assess user behavior trends and maintain a safe online environment.

Platform-Specific Duties and Challenges

Social media managers face platform-specific duties such as curating visually appealing content for Instagram, engaging in real-time conversations on Twitter (now X), and creating video-centric posts for TikTok. Each platform's algorithm calls for a tailored strategy to optimize reach, which requires continuous adaptation to algorithm updates and shifts in user behavior. The challenge lies in maintaining brand consistency while addressing distinct audience preferences across Facebook, LinkedIn, Snapchat, and emerging platforms.

Differences in Tools and Technologies Used

Social media platforms use different tools and technologies to create distinct user experiences: Instagram emphasizes visual filters and Stories, while Twitter centers on real-time text updates and trending hashtags. Recommendation algorithms personalize content feeds based on user behavior, while backend technologies such as cloud storage and AI-driven moderation systems help platforms scale and keep users safe. Understanding these technological differences helps you choose the best platform for your content and engagement goals.

Impact on Online Community Safety

Social media platforms significantly shape online community safety by influencing user behavior and content moderation policies. Algorithmic recommendation systems often amplify both positive interactions and harmful content, making the regulation of misinformation and cyberbullying critical. Enhanced machine learning techniques and community reporting tools are essential for creating safer digital environments and protecting vulnerable populations.

Career Pathways and Opportunities in Moderation

Social media moderation offers diverse career pathways including content reviewer, community manager, and digital compliance analyst, each requiring expertise in platform policies and user engagement. Growing demand in tech companies, marketing firms, and governmental agencies highlights opportunities for specialists skilled in AI tools and crisis management. Continuous learning in digital ethics and communication strategies enhances job prospects and career advancement in this evolving field.

socmedb.com